Zebra

Zebra is a Zcash full node written in Rust.

Getting Started

You can run Zebra using our Docker image or you can install it manually.

Docker

This command runs our latest release and syncs it to the tip:

docker run zfnd/zebra:latest

For more information, read our Docker documentation.

Manual Install

Building Zebra requires Rust, libclang, and a C++ compiler. Below are quick summaries for installing these dependencies.

General Instructions for Installing Dependencies

- Install cargo and rustc.
- Install Zebra’s build dependencies:
  - libclang, a library that comes under various names, typically libclang, libclang-dev, llvm, or llvm-dev;
  - clang or another C++ compiler (g++, which is for all platforms, or Xcode, which is for macOS);
  - protoc (optional).

Dependencies on Arch Linux

sudo pacman -S rust clang protobuf

Note that the package clang includes libclang as well. The GCC version on Arch Linux has a broken build script in a rocksdb dependency. A workaround is:

export CXXFLAGS="$CXXFLAGS -include cstdint"

Once you have the dependencies in place, you can install Zebra with:

cargo install --locked zebrad

Alternatively, you can install it from GitHub:

cargo install --git https://github.com/ZcashFoundation/zebra --tag v2.5.0 zebrad

You can start Zebra by running:

zebrad start

Refer to the Building and Installing Zebra and Running Zebra sections in the book for enabling optional features, detailed configuration and further details.

CI/CD Architecture

Zebra uses a comprehensive CI/CD system built on GitHub Actions to ensure code quality, maintain stability, and automate routine tasks. Our CI/CD infrastructure:

- Runs automated tests on every PR and commit.

- Manages deployments to various environments.

- Handles cross-platform compatibility checks.

- Automates release processes.

For a detailed understanding of our CI/CD system, including workflow diagrams, infrastructure details, and best practices, see our CI/CD Architecture Documentation.

Documentation

The Zcash Foundation maintains the following resources documenting Zebra:

- The Zebra Book
- User guides of note:
  - Zebra Health Endpoints: liveness/readiness checks for Kubernetes and load balancers
- The documentation of the public APIs for the latest releases of the individual Zebra crates.
- The documentation of the internal APIs for the main branch of the whole Zebra monorepo.

User support

If Zebra doesn’t behave the way you expected, open an issue. We regularly triage new issues and we will respond. We maintain a list of known issues in the Troubleshooting section of the book.

If you want to chat with us, join the Zcash Foundation Discord Server and find the “zebra-support” channel.

Security

Zebra has a responsible disclosure policy, which we encourage security researchers to follow.

License

Zebra is distributed under the terms of both the MIT license and the Apache License (Version 2.0). Some Zebra crates are distributed under the MIT license only, because some of their code was originally from MIT-licensed projects. See each crate’s directory for details.

See LICENSE-APACHE and LICENSE-MIT.

User Documentation

This section contains details on how to install, run, and instrument Zebra.

System Requirements

Zebra has the following hardware requirements.

Recommended Requirements

- 4 CPU cores

- 16 GB RAM

- 300 GB available disk space

- 100 Mbps network connection, with 300 GB of uploads and downloads per month

Minimum Hardware Requirements

- 2 CPU cores

- 4 GB RAM

- 300 GB available disk space

Zebra has successfully run on an Orange Pi Zero 2W with a 512 GB microSD card without any issues.

Disk Requirements

Zebra uses around 300 GB for cached Mainnet data, and 10 GB for cached Testnet data. We expect disk usage to grow over time.

Zebra cleans up its database periodically, and also when you shut it down or restart it. Changes are committed using RocksDB database transactions. If you forcibly terminate Zebra, or it panics, any incomplete changes will be rolled back the next time it starts. So Zebra’s state should always be valid, unless your OS or disk hardware is corrupting data.

Network Requirements and Ports

Zebra uses the following inbound and outbound TCP ports:

- 8233 on Mainnet

- 18233 on Testnet
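As a minimal sketch of the port rule above, this Rust snippet maps a network to its default peer-to-peer port. The `Network` enum and the `default_p2p_port` function are hypothetical illustrations, not Zebra's actual API.

```rust
/// Hypothetical network selector; Zebra's real network type has more variants and methods.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
enum Network {
    Mainnet,
    Testnet,
}

/// Default TCP port used for inbound and outbound peer connections on each network.
fn default_p2p_port(network: Network) -> u16 {
    match network {
        Network::Mainnet => 8233,
        Network::Testnet => 18233,
    }
}

fn main() {
    for network in [Network::Mainnet, Network::Testnet] {
        println!("{:?} peers use TCP port {}", network, default_p2p_port(network));
    }
}
```

A firewall only needs to allow the port for the network you actually run; opening it inbound is optional, as the next paragraph explains.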

If you configure Zebra with a specific listen_addr, it will advertise this address to other nodes for inbound connections. Outbound connections are required to sync; inbound connections are optional. Zebra also needs access to the Zcash DNS seeders, via the OS DNS resolver (usually port 53).

Zebra makes outbound connections to peers on any port. However, zcashd prefers peers on the default ports, so that it can’t be used for DDoS attacks on other networks.

Typical Mainnet Network Usage

- Initial sync: 300 GB download. As already noted, we expect the initial download to grow.

- Ongoing updates: 10 MB to 10 GB upload and download per day, depending on user-created transaction size and peer requests.
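The upper end of that daily range lines up with the 300 GB per month listed under the recommended requirements. A quick Rust check, assuming a 30-day month and decimal units (1 GB = 1000 MB):

```rust
/// Approximate monthly transfer in GB, assuming a 30-day month.
fn monthly_gb(daily_gb: f64) -> f64 {
    const DAYS_PER_MONTH: f64 = 30.0;
    daily_gb * DAYS_PER_MONTH
}

fn main() {
    let low = monthly_gb(10.0 / 1000.0); // 10 MB per day
    let high = monthly_gb(10.0); // 10 GB per day
    println!("ongoing Mainnet traffic: about {low:.1} GB to {high:.0} GB per month");
}
```

This covers ongoing updates only; the initial sync adds its own 300 GB (or more) download on top.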

Zebra needs some peers which have a round-trip latency of 2 seconds or less. If this is a problem for you, please open a ticket.

Platform Support

Support for different platforms is organized into three tiers, each with a different set of guarantees. For more information on the policies for platforms at each tier, see the Platform Tier Policy.

Platforms are identified by their Rust “target triple”, which is a string of the form <machine>-<vendor>-<operating system>.
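To make the `<machine>-<vendor>-<operating system>` shape concrete, here is a small Rust sketch that splits a triple into those parts. Real Rust triples can carry a trailing environment field (such as `gnu` or `msvc`); this sketch keeps it as part of the operating-system component. `parse_triple` is a hypothetical helper, not part of Zebra or rustc.

```rust
/// The three components of a target triple, as described above.
#[derive(Debug, PartialEq, Eq)]
struct TargetTriple<'a> {
    machine: &'a str,
    vendor: &'a str,
    /// Everything after the vendor, e.g. "linux-gnu" or "darwin".
    operating_system: &'a str,
}

/// Splits a triple like "x86_64-unknown-linux-gnu" into its parts.
/// Returns `None` if there are fewer than three non-empty components.
fn parse_triple(triple: &str) -> Option<TargetTriple<'_>> {
    let mut parts = triple.splitn(3, '-');
    let machine = parts.next()?;
    let vendor = parts.next()?;
    let operating_system = parts.next()?;
    if [machine, vendor, operating_system].iter().any(|s| s.is_empty()) {
        return None;
    }
    Some(TargetTriple { machine, vendor, operating_system })
}

fn main() {
    for triple in ["x86_64-unknown-linux-gnu", "aarch64-apple-darwin", "x86_64-pc-windows-msvc"] {
        println!("{triple}: {:?}", parse_triple(triple));
    }
}
```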

Tier 1

Tier 1 platforms can be thought of as “guaranteed to work”. The Zebra project builds official binary releases for each tier 1 platform, and automated testing ensures that each tier 1 platform builds and passes tests after each change.

For the full requirements, see Tier 1 platform policy in the Platform Tier Policy.

| platform | os | notes | rust | artifacts |
|---|---|---|---|---|
| x86_64-unknown-linux-gnu | Debian 13 | 64-bit | latest stable release | Docker |
| aarch64-unknown-linux-gnu | Debian 13 | 64-bit ARM64 | latest stable release | Docker |

Tier 2

Tier 2 platforms can be thought of as “guaranteed to build”. The Zebra project builds in CI for each tier 2 platform, and automated builds ensure that each tier 2 platform builds after each change. Not all automated tests are run so it’s not guaranteed to produce a working build, and official builds are not available, but tier 2 platforms often work to quite a good degree and patches are always welcome!

For the full requirements, see Tier 2 platform policy in the Platform Tier Policy.

| platform | os | notes | rust | artifacts |
|---|---|---|---|---|
| x86_64-unknown-linux-gnu | GitHub ubuntu-latest | 64-bit | latest stable release | N/A |
| x86_64-unknown-linux-gnu | GitHub ubuntu-latest | 64-bit | latest beta release | N/A |
| x86_64-apple-darwin | GitHub macos-latest | 64-bit | latest stable release | N/A |
| x86_64-pc-windows-msvc | GitHub windows-latest | 64-bit | latest stable release | N/A |

Tier 3

Tier 3 platforms are those which the Zebra codebase has support for, but which the Zebra project does not build or test automatically, so they may or may not work. Official builds are not available.

For the full requirements, see Tier 3 platform policy in the Platform Tier Policy.

| platform | os | notes | rust | artifacts |
|---|---|---|---|---|
| aarch64-apple-darwin | latest macOS | 64-bit, Apple M1 or M2 | latest stable release | N/A |

Platform Tier Policy


General

The Zcash Foundation provides three tiers of platform support, modeled after the Rust Target Tier Policy:

- The Zcash Foundation provides no guarantees about tier 3 platforms; they may or may not build with the current codebase.

- Zebra’s continuous integration checks that tier 2 platforms will always build, but they may or may not pass tests.

- Zebra’s continuous integration checks that tier 1 platforms will always build and pass tests.

Adding a new tier 3 platform imposes minimal requirements; we focus primarily on avoiding disruption to ongoing Zebra development.

Tier 2 and tier 1 platforms place work on Zcash Foundation developers as a whole, to avoid breaking the platform. These tiers require commensurate and ongoing efforts from the maintainers of the platform, to demonstrate value and to minimize any disruptions to ongoing Zebra development.

This policy defines the requirements for accepting a proposed platform at a given level of support.

Each tier is based on all the requirements from the previous tier, unless overridden by a stronger requirement.

While these criteria attempt to document the policy, that policy still involves human judgment. Targets must fulfill the spirit of the requirements as well, as determined by the judgment of the Zebra team.

For a list of all supported platforms and their corresponding tiers (“tier 3”, “tier 2”, or “tier 1”), see platform support.

Note that a platform must have already received approval for the next lower tier, and spent a reasonable amount of time at that tier, before making a proposal for promotion to the next higher tier; this is true even if a platform meets the requirements for several tiers at once. This policy leaves the precise interpretation of “reasonable amount of time” up to the Zebra team.
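One way to read the tier rules above is as an ordered enum. This Rust sketch is purely illustrative (the `Tier` type and its methods are hypothetical, not part of Zebra): it encodes which guarantees CI gives at each tier, and that a platform may only propose promotion to the next tier up. The “reasonable amount of time” at the current tier is a human judgment and is not modeled.

```rust
/// Support tiers from the Platform Tier Policy, ordered from weakest to strongest guarantee.
#[derive(Debug, Clone, Copy, PartialEq, Eq, PartialOrd, Ord)]
enum Tier {
    Three,
    Two,
    One,
}

impl Tier {
    /// Whether CI checks that the platform always builds (tiers 2 and 1).
    fn ci_builds(self) -> bool {
        self >= Tier::Two
    }

    /// Whether CI checks that the platform always passes tests (tier 1 only).
    fn ci_passes_tests(self) -> bool {
        self == Tier::One
    }

    /// The only tier a platform at `self` may propose promotion to.
    fn next_higher(self) -> Option<Tier> {
        match self {
            Tier::Three => Some(Tier::Two),
            Tier::Two => Some(Tier::One),
            Tier::One => None,
        }
    }
}

/// A promotion proposal is only in order if it targets the next higher tier,
/// even when the platform already meets the requirements of several tiers.
fn may_propose_promotion(current: Tier, proposed: Tier) -> bool {
    current.next_higher() == Some(proposed)
}

fn main() {
    for tier in [Tier::Three, Tier::Two, Tier::One] {
        println!(
            "{:?}: CI builds = {}, CI tests pass = {}, next = {:?}",
            tier,
            tier.ci_builds(),
            tier.ci_passes_tests(),
            tier.next_higher()
        );
    }
}
```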

The availability or tier of a platform in stable Zebra is not a hard stability guarantee about the future availability or tier of that platform. Higher-level platform tiers are an increasing commitment to the support of a platform, and we will take that commitment and potential disruptions into account when evaluating the potential demotion or removal of a platform that has been part of a stable release. The promotion or demotion of a platform will not generally affect existing stable releases, only current development and future releases.

In this policy, the words “must” and “must not” specify absolute requirements that a platform must meet to qualify for a tier. The words “should” and “should not” specify requirements that apply in almost all cases, but for which the Zebra team may grant an exception for good reason. The word “may” indicates something entirely optional, and does not indicate guidance or recommendations. This language is based on IETF RFC 2119.

Tier 3 platform policy

At this tier, the Zebra project provides no official support for a platform, so we place minimal requirements on the introduction of platforms.

- A tier 3 platform must have a designated developer or developers (the “platform maintainers”) on record to be CCed when issues arise regarding the platform. (The mechanism to track and CC such developers may evolve over time.)

- Target names should not introduce undue confusion or ambiguity unless absolutely necessary to maintain ecosystem compatibility. For example, if the name of the platform makes people extremely likely to form incorrect beliefs about what it targets, the name should be changed or augmented to disambiguate it.

- Tier 3 platforms must not impose burden on the authors of pull requests, or other developers in the community, to maintain the platform. In particular, do not post comments (automated or manual) on a PR that derail or suggest a block on the PR based on a tier 3 platform. Do not send automated messages or notifications (via any medium, including via @) to a PR author or others involved with a PR regarding a tier 3 platform, unless they have opted into such messages.
- Patches adding or updating tier 3 platforms must not break any existing tier 2 or tier 1 platform, and must not knowingly break another tier 3 platform without approval of either the Zebra team or the other tier 3 platform.

If a tier 3 platform stops meeting these requirements, or the platform maintainers no longer have interest or time, or the platform shows no signs of activity and has not built for some time, or removing the platform would improve the quality of the Zebra codebase, we may post a PR to remove support for that platform. Any such PR will be CCed to the platform maintainers (and potentially other people who have previously worked on the platform), to check potential interest in improving the situation.

Tier 2 platform policy

At this tier, the Zebra project guarantees that a platform builds, and will reject patches that fail to build on a platform. Thus, we place requirements that ensure the platform will not block forward progress of the Zebra project.

A proposed new tier 2 platform must be reviewed and approved by the Zebra team based on these requirements.

In addition, the devops team must approve the integration of the platform into Continuous Integration (CI), and the tier 2 CI-related requirements. This review and approval may take place in a PR adding the platform to CI, or simply by a devops team member reporting the outcome of a team discussion.

- Tier 2 platforms must implement all the Zcash consensus rules. Other Zebra features and binaries may be disabled, on a case-by-case basis.

- A tier 2 platform must have a designated team of developers (the “platform maintainers”) available to consult on platform-specific build-breaking issues. This team must have at least 1 developer.

- The platform must not place undue burden on Zebra developers not specifically concerned with that platform. Zebra developers are expected to not gratuitously break a tier 2 platform, but are not expected to become experts in every tier 2 platform, and are not expected to provide platform-specific implementations for every tier 2 platform.

- The platform must provide documentation for the Zcash community explaining how to build for their platform, and explaining how to run tests for the platform. If at all possible, this documentation should show how to run Zebra programs and tests for the platform using emulation, to allow anyone to do so. If the platform cannot be feasibly emulated, the documentation should document the required physical hardware or cloud systems.

- The platform must document its baseline expectations for the features or versions of CPUs, operating systems, and any other dependencies.

- The platform must build reliably in CI, for all components that Zebra’s CI considers mandatory.

- Since a working Rust compiler is required to build Zebra, the platform must be a Rust tier 1 platform.

- The Zebra team may additionally require that a subset of tests pass in CI. In particular, this requirement may apply if the tests in question provide substantial value via early detection of critical problems.

- Building the platform in CI must not take substantially longer than the current slowest platform in CI, and should not substantially raise the maintenance burden of the CI infrastructure. This requirement is subjective, to be evaluated by the devops team, and will take the community importance of the platform into account.

- Test failures on tier 2 platforms will be handled on a case-by-case basis. Depending on the severity of the failure, the Zebra team may decide to:
  - disable the test on that platform,
  - require a fix before the next release, or
  - remove the platform from tier 2.

- The platform maintainers should regularly run the testsuite for the platform, and should fix any test failures in a reasonably timely fashion.

- All requirements for tier 3 apply.

A tier 2 platform may be demoted or removed if it no longer meets these requirements. Any proposal for demotion or removal will be CCed to the platform maintainers, and will be communicated widely to the Zcash community before being dropped from a stable release. (The amount of time between such communication and the next stable release may depend on the nature and severity of the failed requirement, the timing of its discovery, whether the platform has been part of a stable release yet, and whether the demotion or removal can be a planned and scheduled action.)

Tier 1 platform policy

At this tier, the Zebra project guarantees that a platform builds and passes all tests, and will reject patches that fail to build or pass the testsuite on a platform. We hold tier 1 platforms to our highest standard of requirements.

A proposed new tier 1 platform must be reviewed and approved by the Zebra team based on these requirements. In addition, the release team must approve the viability and value of supporting the platform.

In addition, the devops team must approve the integration of the platform into Continuous Integration (CI), and the tier 1 CI-related requirements. This review and approval may take place in a PR adding the platform to CI, or by a devops team member reporting the outcome of a team discussion.

- Tier 1 platforms must implement Zebra’s standard production feature set, including the network protocol, mempool, cached state, and RPCs. Exceptions may be made on a case-by-case basis.

- Zebra must have reasonable security, performance, and robustness on that platform. These requirements are subjective, and determined by consensus of the Zebra team.

- Internal developer tools and manual testing tools may be disabled for that platform.

- The platform must serve the ongoing needs of multiple production users of Zebra across multiple organizations or projects. These requirements are subjective, and determined by consensus of the Zebra team. A tier 1 platform may be demoted or removed if it becomes obsolete or no longer meets this requirement.

- The platform must build and pass tests reliably in CI, for all components that Zebra’s CI considers mandatory.

- Test failures on tier 1 platforms will be handled on a case-by-case basis. Depending on the severity of the failure, the Zebra team may decide to:
  - disable the test on that platform,
  - require a fix before the next release,
  - require a fix before any other PRs merge, or
  - remove the platform from tier 1.

- The platform must not disable an excessive number of tests or pieces of tests in the testsuite in order to do so. This is a subjective requirement.


- Building the platform and running the testsuite for the platform must not take substantially longer than other platforms, and should not substantially raise the maintenance burden of the CI infrastructure.
  - In particular, if building the platform takes a reasonable amount of time, but the platform cannot run the testsuite in a timely fashion due to low performance, that alone may prevent the platform from qualifying as tier 1.

- If running the testsuite requires additional infrastructure (such as physical systems running the platform), the platform maintainers must arrange to provide such resources to the Zebra project, to the satisfaction and approval of the Zebra devops team.
  - Such resources may be provided via cloud systems, via emulation, or via physical hardware.
  - If the platform requires the use of emulation to meet any of the tier requirements, the Zebra team must have high confidence in the accuracy of the emulation, such that discrepancies between emulation and native operation that affect test results will constitute a high-priority bug in either the emulation, the Rust implementation of the platform, or the Zebra implementation for the platform.
  - If it is not possible to run the platform via emulation, these resources must additionally be sufficient for the Zebra devops team to make them available for access by Zebra team members, for the purposes of development and testing. (Note that the responsibility for doing platform-specific development to keep the platform well maintained remains with the platform maintainers. This requirement ensures that it is possible for other Zebra developers to test the platform, but does not obligate other Zebra developers to make platform-specific fixes.)
  - Resources provided for CI and similar infrastructure must be available for continuous exclusive use by the Zebra project. Resources provided for access by Zebra team members for development and testing must be available on an exclusive basis when in use, but need not be available on a continuous basis when not in use.

- All requirements for tier 2 apply.

A tier 1 platform may be demoted if it no longer meets these requirements but still meets the requirements for a lower tier. Any such proposal will be communicated widely to the Zcash community, both when initially proposed and before being dropped from a stable release. A tier 1 platform is highly unlikely to be directly removed without first being demoted to tier 2 or tier 3. (The amount of time between such communication and the next stable release may depend on the nature and severity of the failed requirement, the timing of its discovery, whether the platform has been part of a stable release yet, and whether the demotion or removal can be a planned and scheduled action.)

Raising the baseline expectations of a tier 1 platform (such as the minimum CPU features or OS version required) requires the approval of the Zebra team, and should be widely communicated as well.

Building and Installing Zebra

The easiest way to install and run Zebra is to follow the Getting Started section.

Building Zebra

If you want to build Zebra, install the build dependencies as described in the Manual Install section, and get the source code from GitHub:

git clone https://github.com/ZcashFoundation/zebra.git

cd zebra

You can then build and run zebrad by:

cargo build --release --bin zebrad

target/release/zebrad start

If you rebuild Zebra often, you can speed the build process up by dynamically linking RocksDB, which is a C++ dependency, instead of rebuilding it and linking it statically. If you want to utilize dynamic linking, first install RocksDB version >= 8.9.1 as a system library. On Arch Linux, you can do that by:

pacman -S rocksdb

On Ubuntu version >= 24.04, that would be:

apt install -y librocksdb-dev

Once you have the library installed, set:

export ROCKSDB_LIB_DIR="/usr/lib/"

and enjoy faster builds. Dynamic linking will also decrease the size of the resulting zebrad binary in release mode by about 6 MB.

Building on ARM

If you’re using an ARM machine, install the Rust compiler for ARM. If you build Zebra using the x86_64 tools, it might run really slowly.

Build Troubleshooting

If you are having trouble with:

- clang: Install both libclang and clang; they are usually different packages.
- libclang: Check out the clang-sys documentation.
- g++ or MSVC++: Try using clang or Xcode instead.
- rustc: Use the latest stable rustc and cargo versions.
- dependencies: Use cargo install without --locked to build with the latest versions of each dependency.

Optional Tor feature

The zebra-network/tor feature has an optional dependency named libsqlite3.

If you don’t have it installed, you might see errors like note: /usr/bin/ld: cannot find -lsqlite3. Follow the arti instructions to install libsqlite3, or use one of these commands instead:

cargo build

cargo build -p zebrad --all-features

Running Zebra

You can run Zebra as a backend for lightwalletd (https://zebra.zfnd.org/user/lightwalletd.html) or a mining pool (https://zebra.zfnd.org/user/mining.html).

For Kubernetes and load balancer integrations, Zebra provides simple HTTP health endpoints.

Optional Configs & Features

Zebra supports a variety of optional features which you can enable and configure manually.

Initializing Configuration File

The command below generates a zebrad.toml config file at the default location for config files on GNU/Linux. The locations for other operating systems are documented here.

zebrad generate -o ~/.config/zebrad.toml

The generated config file contains Zebra’s default options, which take effect if no config is present. The contents of the config file are a TOML encoding of the internal config structure. All config options are documented here.
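As a rough sketch of where that GNU/Linux default location comes from, the Rust function below resolves the path under the assumption that Zebra follows XDG Base Directory conventions: `$XDG_CONFIG_HOME` when it is set to an absolute path, otherwise `$HOME/.config`. The function name is hypothetical; check the linked documentation for the authoritative per-OS locations.

```rust
use std::path::PathBuf;

/// Hypothetical resolver for the default zebrad.toml location on GNU/Linux,
/// assuming XDG conventions: an absolute $XDG_CONFIG_HOME wins, else $HOME/.config.
fn default_config_path_linux(xdg_config_home: Option<&str>, home: &str) -> PathBuf {
    let config_dir = match xdg_config_home {
        Some(dir) if dir.starts_with('/') => PathBuf::from(dir),
        _ => PathBuf::from(home).join(".config"),
    };
    config_dir.join("zebrad.toml")
}

fn main() {
    let xdg = std::env::var("XDG_CONFIG_HOME").ok();
    // Fallback home directory used only for this illustration.
    let home = std::env::var("HOME").unwrap_or_else(|_| String::from("/root"));
    println!("{}", default_config_path_linux(xdg.as_deref(), &home).display());
}
```

With `XDG_CONFIG_HOME` unset, this yields the same `~/.config/zebrad.toml` path used in the `zebrad generate` command above.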

Configuring Progress Bars

Configure tracing.progress_bar in your zebrad.toml to show key metrics in the terminal using progress bars. When progress bars are active, Zebra automatically sends logs to a file. Note that there is a known issue where progress bar estimates become extremely large. In future releases, the progress_bar = "summary" config will show a few key metrics, and the detailed config will show all available metrics. Please let us know which metrics are important to you!

Custom Build Features

You can build Zebra with additional Cargo features:

- prometheus for Prometheus metrics
- sentry for Sentry monitoring
- elasticsearch for experimental Elasticsearch support

You can combine multiple features by listing them as parameters of the --features flag:

cargo install --features="<feature1> <feature2> ..." ...

The full list of all features is in the API documentation. Some debugging and monitoring features are disabled in release builds to increase performance.

Return Codes

- 0: Application exited successfully
- 1: Application exited unsuccessfully
- 2: Application crashed

zebrad may also return platform-dependent codes.
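If you supervise zebrad from your own tooling, the codes above can be interpreted as in this Rust sketch. `describe_exit` is a hypothetical helper; it takes the value returned by `std::process::ExitStatus::code()`, which is `None` on Unix when the process was terminated by a signal.

```rust
/// Describes a zebrad exit code using the convention listed above.
fn describe_exit(code: Option<i32>) -> &'static str {
    match code {
        Some(0) => "exited successfully",
        Some(1) => "exited unsuccessfully",
        Some(2) => "crashed",
        Some(_) => "platform-dependent code",
        // On Unix, a process killed by a signal has no exit code.
        None => "terminated without an exit code",
    }
}

fn main() {
    // Sample values; in practice, pass `status.code()` from `Command::status()`.
    for code in [Some(0), Some(1), Some(2), Some(3), None] {
        println!("{code:?}: {}", describe_exit(code));
    }
}
```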

Zebra with Docker

The foundation maintains a Docker infrastructure for deploying and testing Zebra.

Quick Start

To get Zebra quickly up and running, you can use an off-the-rack image from Docker Hub:

docker run --name zebra zfnd/zebra

If you want to preserve Zebra’s state, you can create a Docker volume:

docker volume create zebrad-cache

And mount it before you start the container:

docker run \
  --mount source=zebrad-cache,target=/home/zebra/.cache/zebra \
  --name zebra \
  zfnd/zebra

You can also use docker compose, which we recommend. First get the repo:

git clone --depth 1 --branch v2.5.0 https://github.com/ZcashFoundation/zebra.git

cd zebra

Then run:

docker compose -f docker/docker-compose.yml up

Custom Images

If you want to use your own images with, for example, some opt-in compilation features enabled, add the desired features to the FEATURES variable in the docker/.env file and build the image:

docker build \
  --file docker/Dockerfile \
  --env-file docker/.env \
  --target runtime \
  --tag zebra:local \
  .

Alternatives

See Building Zebra for more information.

Building with Custom Features

Zebra supports various features that can be enabled at build time using the FEATURES build argument. For example, if we’d like to enable metrics on the image, we’d build it using the following build-arg:

# Build with specific features
docker build -f ./docker/Dockerfile --target runtime \
  --build-arg FEATURES="default-release-binaries prometheus" \
  --tag zebra:metrics .

Important: To fully use and display the metrics, you’ll need to run a Prometheus and Grafana server, and configure it to scrape and visualize the metrics endpoint. This is explained in more detail in the Metrics section of the User Guide.

All available Cargo features are listed at https://docs.rs/zebrad/latest/zebrad/index.html#zebra-feature-flags.

Configuring Zebra

Zebra uses config-rs to layer configuration from defaults, an optional TOML file, and ZEBRA_-prefixed environment variables. When running with Docker, configure Zebra using any of the following (later items override earlier ones):

- Provide a specific config file path: Set the CONFIG_FILE_PATH environment variable to point to your config file within the container. The entrypoint will pass it to zebrad via --config.
- Use the default config file: Mount a config file to /home/zebra/.config/zebrad.toml (for example, using the configs: mapping in docker-compose.yml). This file is loaded if CONFIG_FILE_PATH is not set.
- Use environment variables: Set ZEBRA_-prefixed environment variables to override settings from the config file. Examples: ZEBRA_NETWORK__NETWORK, ZEBRA_RPC__LISTEN_ADDR, ZEBRA_RPC__ENABLE_COOKIE_AUTH, ZEBRA_RPC__COOKIE_DIR, ZEBRA_METRICS__ENDPOINT_ADDR, ZEBRA_MINING__MINER_ADDRESS.
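The layering above can be sketched in Rust with plain maps. This is an illustration of the idea, not config-rs itself: it assumes that `__` separates nesting levels (so `ZEBRA_RPC__LISTEN_ADDR` addresses `rpc.listen_addr`), that keys are lowercased, and that each later layer overwrites keys from earlier ones. `env_to_config_key` and `layer` are hypothetical names.

```rust
use std::collections::BTreeMap;

/// Converts a ZEBRA_-prefixed variable name into a dotted config key,
/// e.g. ZEBRA_RPC__LISTEN_ADDR -> rpc.listen_addr. Single underscores stay inside a key.
fn env_to_config_key(name: &str) -> Option<String> {
    let rest = name.strip_prefix("ZEBRA_")?;
    if rest.is_empty() {
        return None;
    }
    Some(rest.split("__").map(str::to_lowercase).collect::<Vec<_>>().join("."))
}

/// Merges configuration layers in order; later layers override earlier ones.
fn layer(layers: &[BTreeMap<String, String>]) -> BTreeMap<String, String> {
    let mut merged = BTreeMap::new();
    for source in layers {
        for (key, value) in source {
            merged.insert(key.clone(), value.clone());
        }
    }
    merged
}

fn main() {
    // Illustrative layers: a built-in default, a value from zebrad.toml, then the real environment.
    let defaults = BTreeMap::from([("network.network".to_string(), "Mainnet".to_string())]);
    let file = BTreeMap::from([("rpc.listen_addr".to_string(), "0.0.0.0:8232".to_string())]);
    let env: BTreeMap<String, String> = std::env::vars()
        .filter_map(|(name, value)| env_to_config_key(&name).map(|key| (key, value)))
        .collect();
    for (key, value) in layer(&[defaults, file, env]) {
        println!("{key} = {value}");
    }
}
```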

You can verify your configuration by inspecting Zebra’s logs at startup.

RPC

Zebra’s RPC server is disabled by default. Enable and configure it via the TOML configuration file, or configuration environment variables:

- Using a config file: Add or uncomment the [rpc] section in your zebrad.toml. Set listen_addr (e.g., "0.0.0.0:8232" for Mainnet).
- Using environment variables: Set ZEBRA_RPC__LISTEN_ADDR (e.g., 0.0.0.0:8232). To disable cookie auth, set ZEBRA_RPC__ENABLE_COOKIE_AUTH=false. To change the cookie directory, set ZEBRA_RPC__COOKIE_DIR=/path/inside/container.

Cookie Authentication:

By default, Zebra uses cookie-based authentication for RPC requests (enable_cookie_auth = true). When enabled, Zebra generates a unique, random cookie file required for client authentication.

- Cookie Location: By default, the cookie is stored at <cache_dir>/.cookie, where <cache_dir> is Zebra’s cache directory. For the zebra user in the container, the cookie is typically at /home/zebra/.cache/zebra/.cookie.
- Viewing the Cookie: If the container is running and RPC is enabled with authentication, you can view the cookie content using:

docker exec <container_name> cat /home/zebra/.cache/zebra/.cookie

Replace <container_name> with your container’s name, typically zebra if using the default docker-compose.yml. Your RPC client will need this value.
- Disabling Authentication: If you need to disable cookie authentication (e.g., for compatibility with tools like lightwalletd):
  - If using a config file, set enable_cookie_auth = false within the [rpc] section:

[rpc]
# listen_addr = ...
enable_cookie_auth = false

  - If using environment variables, set ZEBRA_RPC__ENABLE_COOKIE_AUTH=false.

Remember that Zebra only generates the cookie file if the RPC server is enabled and enable_cookie_auth is set to true (or omitted, as true is the default).
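The cookie rules above can be sketched in Rust: where the file lives, when it should exist, and how a client might read it. The function names are hypothetical, `rpc_enabled` stands in for “the RPC server has a listen_addr configured”, and how a client then presents the cookie to the RPC server is not shown here.

```rust
use std::fs;
use std::io;
use std::path::{Path, PathBuf};

/// The cookie file lives directly inside Zebra's cache directory.
fn cookie_path(cache_dir: &Path) -> PathBuf {
    cache_dir.join(".cookie")
}

/// A cookie is only generated when RPC is enabled and enable_cookie_auth
/// is true or omitted (it defaults to true).
fn cookie_expected(rpc_enabled: bool, enable_cookie_auth: Option<bool>) -> bool {
    rpc_enabled && enable_cookie_auth.unwrap_or(true)
}

/// Reads the cookie contents, trimming surrounding whitespace such as a trailing newline.
fn read_cookie(cache_dir: &Path) -> io::Result<String> {
    Ok(fs::read_to_string(cookie_path(cache_dir))?.trim().to_string())
}

fn main() {
    // Cache directory of the zebra user inside the Docker image.
    let cache_dir = Path::new("/home/zebra/.cache/zebra");
    if cookie_expected(true, None) {
        match read_cookie(cache_dir) {
            Ok(cookie) => println!("read a cookie of {} characters", cookie.len()),
            Err(err) => eprintln!("could not read {}: {err}", cookie_path(cache_dir).display()),
        }
    }
}
```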

Environment variable examples for health endpoints:

ZEBRA_HEALTH__LISTEN_ADDR=0.0.0.0:8080
ZEBRA_HEALTH__MIN_CONNECTED_PEERS=1
ZEBRA_HEALTH__READY_MAX_BLOCKS_BEHIND=2
ZEBRA_HEALTH__ENFORCE_ON_TEST_NETWORKS=false

Health Endpoints

Zebra can expose two lightweight HTTP endpoints for liveness and readiness:

- GET /healthy: returns 200 OK when the process is up and has at least the configured number of recently live peers; otherwise 503.
- GET /ready: returns 200 OK when the node is near the tip and within the configured lag threshold; otherwise 503.

Enable the endpoints by adding a [health] section to your config (see the default Docker config at docker/default-zebra-config.toml):

[health]

listen_addr = "0.0.0.0:8080"

min_connected_peers = 1

ready_max_blocks_behind = 2

enforce_on_test_networks = false
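To illustrate how these settings drive the two endpoints, here is a simplified Rust sketch of the status decisions. It is not Zebra's implementation: the `NodeSnapshot` type is hypothetical, and it assumes that `enforce_on_test_networks = false` waives both checks on test networks. See the Health Endpoints page for the exact rules.

```rust
/// Mirrors the [health] settings shown above (only the fields used here).
struct HealthConfig {
    min_connected_peers: usize,
    ready_max_blocks_behind: u64,
    enforce_on_test_networks: bool,
}

/// Hypothetical snapshot of the node state the checks inspect.
struct NodeSnapshot {
    recently_live_peers: usize,
    blocks_behind_tip: u64,
    on_test_network: bool,
}

/// Assumption: with enforce_on_test_networks = false, both checks pass on test networks.
fn checks_waived(cfg: &HealthConfig, node: &NodeSnapshot) -> bool {
    node.on_test_network && !cfg.enforce_on_test_networks
}

/// Status code for GET /healthy: enough recently live peers.
fn healthy_status(cfg: &HealthConfig, node: &NodeSnapshot) -> u16 {
    if checks_waived(cfg, node) || node.recently_live_peers >= cfg.min_connected_peers {
        200
    } else {
        503
    }
}

/// Status code for GET /ready: no further behind the chain tip than the configured lag.
fn ready_status(cfg: &HealthConfig, node: &NodeSnapshot) -> u16 {
    if checks_waived(cfg, node) || node.blocks_behind_tip <= cfg.ready_max_blocks_behind {
        200
    } else {
        503
    }
}

fn main() {
    let cfg = HealthConfig { min_connected_peers: 1, ready_max_blocks_behind: 2, enforce_on_test_networks: false };
    let syncing = NodeSnapshot { recently_live_peers: 8, blocks_behind_tip: 500, on_test_network: false };
    // prints "/healthy -> 200, /ready -> 503"
    println!("/healthy -> {}, /ready -> {}", healthy_status(&cfg, &syncing), ready_status(&cfg, &syncing));
}
```

A syncing node is therefore alive but not yet ready, which is why liveness and readiness probes should point at different endpoints.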

If you want to expose the endpoints to the host, add a port mapping to your compose file:

ports:

- "8080:8080" # Health endpoints (/healthy, /ready)

For Kubernetes, configure liveness and readiness probes against /healthy and /ready respectively. See the Health Endpoints page for details.

Examples

To make the initial setup of Zebra with other services easier, we provide some example files for docker compose. The following subsections will walk you through those examples.

Running Zebra with Lightwalletd

The following command will run Zebra with Lightwalletd:

docker compose -f docker/docker-compose.lwd.yml up

Note that Docker will run Zebra with the RPC server enabled and the cookie authentication mechanism disabled when running docker compose -f docker/docker-compose.lwd.yml up, since Lightwalletd doesn’t support cookie authentication. In this example, the RPC server is configured by setting ZEBRA_ environment variables directly in docker/docker-compose.lwd.yml (or an accompanying .env file).

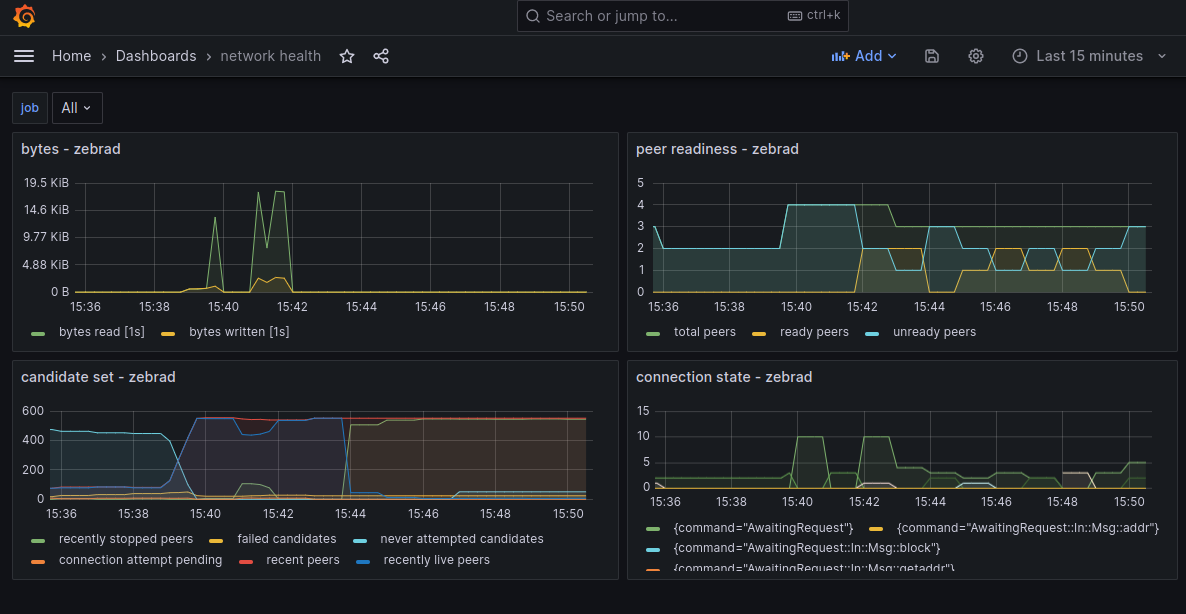

Running Zebra with Prometheus and Grafana

The following commands will run Zebra with Prometheus and Grafana:

docker compose -f docker/docker-compose.grafana.yml build --no-cache

docker compose -f docker/docker-compose.grafana.yml up

In this example, we build a local Zebra image with the prometheus Cargo compilation feature. Note that we enable this feature by specifying its name in the build arguments. Having this Cargo feature specified at build time makes cargo compile Zebra with the metrics support for Prometheus enabled. Note that we also specify this feature as an environment variable at run time. Having this feature specified at run time makes Docker’s entrypoint script configure Zebra to open a scraping endpoint on localhost:9999 for Prometheus.

Once all services are up, the Grafana web UI should be available at localhost:3000, the Prometheus web UI should be at localhost:9090, and Zebra’s scraping page should be at localhost:9999. The default login and password for Grafana are both admin. To make Grafana use Prometheus, you need to add Prometheus as a data source with the URL http://localhost:9090 in Grafana’s UI. You can then import various Grafana dashboards from the grafana directory in the Zebra repo.

Running CI Tests Locally

To run CI tests locally, first set the variables in the test.env file to configure the tests, then run:

docker-compose -f docker/docker-compose.test.yml up

Tracing Zebra

Dynamic Tracing

Zebra supports dynamic tracing, configured using the config’s TracingSection and an HTTP RPC endpoint.

Activate this feature using the filter-reload compile-time feature, and the filter and endpoint_addr runtime config options.

If the endpoint_addr is specified, zebrad will open an HTTP endpoint allowing dynamic runtime configuration of the tracing filter. For instance, if the config had endpoint_addr = '127.0.0.1:3000', then:

- curl -X GET localhost:3000/filter retrieves the current filter string;
- curl -X POST localhost:3000/filter -d "zebrad=trace" sets the current filter string.

See the filter documentation for more details.
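Where curl is unavailable, the same two requests can be sent from Rust. This minimal sketch uses only the standard library; filter_request and send are hypothetical helper names, and the address and filter string are the example values from this section.

```rust
use std::io::{Read, Write};
use std::net::TcpStream;

/// Builds a raw HTTP/1.1 request for the tracing filter endpoint.
/// `Some(filter)` produces a POST that sets the filter; `None` produces a GET.
pub fn filter_request(host: &str, new_filter: Option<&str>) -> String {
    match new_filter {
        Some(filter) => format!(
            "POST /filter HTTP/1.1\r\nHost: {}\r\nContent-Length: {}\r\nConnection: close\r\n\r\n{}",
            host,
            filter.len(),
            filter
        ),
        None => format!(
            "GET /filter HTTP/1.1\r\nHost: {}\r\nConnection: close\r\n\r\n",
            host
        ),
    }
}

/// Sends the request to `endpoint_addr` (e.g. "127.0.0.1:3000") and returns
/// the full raw response, including the status line and headers.
pub fn send(endpoint_addr: &str, new_filter: Option<&str>) -> std::io::Result<String> {
    let mut stream = TcpStream::connect(endpoint_addr)?;
    stream.write_all(filter_request(endpoint_addr, new_filter).as_bytes())?;
    let mut response = String::new();
    stream.read_to_string(&mut response)?;
    Ok(response)
}
```

For example, send("127.0.0.1:3000", Some("zebrad=trace")) corresponds to the POST above, and send("127.0.0.1:3000", None) to the GET.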

journald Logging

Zebra can send tracing spans and events to systemd-journald, on Linux distributions that use systemd.

Activate journald logging using the journald compile-time feature, and the use_journald runtime config option.

Flamegraphs

Zebra can generate flamegraphs of tracing spans.

Activate flamegraphs using the flamegraph compile-time feature, and the flamegraph runtime config option.

Sentry Production Monitoring

Compile Zebra with --features sentry to monitor it using Sentry in production.

Zebra Metrics

Zebra has support for Prometheus, configured using the prometheus compile-time feature and the MetricsSection runtime configuration.

The following steps send real-time Zebra metrics data to a Grafana front end for visualization:

- Build Zebra with the prometheus feature:

cargo install --features prometheus --locked --git https://github.com/ZcashFoundation/zebra zebrad

- Create a zebrad.toml file that we can edit:

zebrad generate -o zebrad.toml

- Add endpoint_addr to the metrics section:

[metrics]
endpoint_addr = "127.0.0.1:9999"

- Run Zebra, and specify the path to the zebrad.toml file, for example:

zebrad -c zebrad.toml start

- Install and run Prometheus and Grafana via Docker:

# create a storage volume for grafana (once)
sudo docker volume create grafana-storage
# create a storage volume for prometheus (once)
sudo docker volume create prometheus-storage
# run prometheus with the included config
sudo docker run --detach --network host --volume prometheus-storage:/prometheus --volume /path/to/zebra/prometheus.yaml:/etc/prometheus/prometheus.yml prom/prometheus
# run grafana
sudo docker run --detach --network host --env GF_SERVER_HTTP_PORT=3030 --env GF_SERVER_HTTP_ADDR=localhost --volume grafana-storage:/var/lib/grafana grafana/grafana

Now the Grafana dashboard is available at http://localhost:3030; the default username and password are admin/admin. Prometheus scrapes Zebra on localhost:9999, and provides the results on localhost:9090.

- Configure Grafana with a Prometheus HTTP data source. Prometheus scrapes Zebra’s metrics.endpoint_addr, so the data source points at Prometheus rather than at Zebra. In the Grafana dashboard:
  - Create a new Prometheus data source named Prometheus-Zebra
  - Enter the HTTP URL: http://127.0.0.1:9090
  - Save the configuration

- Now you can add the Grafana dashboards from zebra/docker/observability/grafana/dashboards/ (Create > Import > Upload JSON File), or create your own.
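Before wiring up Grafana, it can help to check what Zebra’s scraping endpoint actually returns (for example, by fetching metrics.endpoint_addr with curl). The following is a minimal sketch that parses the Prometheus text exposition format into series/value pairs using only the Rust standard library. It is simplified: it assumes label values contain no closing brace and ignores the optional trailing timestamp. The metric names in the test data are hypothetical, not Zebra’s real metric names.

```rust
/// One sample from a Prometheus text-format scrape.
#[derive(Debug, PartialEq)]
pub struct Sample {
    /// Metric name plus any `{labels}`, exactly as scraped.
    pub series: String,
    pub value: f64,
}

/// Parses the Prometheus text exposition format.
/// Simplifications: assumes label values contain no `}` and ignores
/// the optional trailing timestamp.
pub fn parse_exposition(body: &str) -> Vec<Sample> {
    let mut samples = Vec::new();
    for line in body.lines().map(str::trim) {
        // Skip blank lines and `# HELP` / `# TYPE` comments.
        if line.is_empty() || line.starts_with('#') {
            continue;
        }
        // The series ends after the closing `}` when labels are present,
        // otherwise at the first whitespace.
        let end = if line.contains('{') {
            line.rfind('}').map(|i| i + 1)
        } else {
            line.find(char::is_whitespace)
        };
        let Some(end) = end else { continue };
        let (series, rest) = line.split_at(end);
        // The value is the first token after the series.
        if let Some(Ok(value)) = rest.split_whitespace().next().map(str::parse::<f64>) {
            samples.push(Sample { series: series.to_string(), value });
        }
    }
    samples
}
```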

Zebra Health Endpoints

zebrad can serve two lightweight HTTP endpoints for liveness and readiness checks. These endpoints are intended for Kubernetes probes and load balancers. They are disabled by default and can be enabled via configuration.

Endpoints

- GET /healthy
  - 200 OK: process is up and has at least the configured number of recently live peers (default: 1)
  - 503 Service Unavailable: not enough peers

- GET /ready
  - 200 OK: node is near the network tip and the estimated block lag is within the configured threshold (default: 2 blocks)
  - 503 Service Unavailable: still syncing or lag exceeds threshold

Configuration

Add a health section to your zebrad.toml:

[health]

listen_addr = "0.0.0.0:8080" # enable server; omit to disable

min_connected_peers = 1 # /healthy threshold

ready_max_blocks_behind = 2 # /ready threshold

enforce_on_test_networks = false # if false, /ready is always 200 on regtest/testnet

Config struct reference: components::health::Config.

Kubernetes Probes

Example Deployment probes:

livenessProbe:
  httpGet:
    path: /healthy
    port: 8080
  initialDelaySeconds: 10
  periodSeconds: 10
readinessProbe:
  httpGet:
    path: /ready
    port: 8080
  initialDelaySeconds: 10
  periodSeconds: 5

Security

- Endpoints are unauthenticated and return minimal plain text.

- Bind to an internal address and restrict exposure with network policies, firewall rules, or Service selectors.

Notes

- Readiness combines a moving‑average “near tip” signal with a hard block‑lag cap.

- Adjust thresholds based on your SLA and desired routing behavior.
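To make the two thresholds concrete, here is a hypothetical sketch of the decision each endpoint makes, using the field names from the [health] section. It is not Zebra’s implementation: near_tip stands in for the moving-average signal and estimated_lag for the block-lag estimate, and how Zebra computes either value is not shown here.

```rust
/// Networks relevant to `enforce_on_test_networks`.
#[derive(Clone, Copy, Debug, PartialEq)]
pub enum Network {
    Mainnet,
    Testnet,
    Regtest,
}

/// Mirrors the documented `[health]` options (listen_addr omitted).
pub struct HealthConfig {
    pub min_connected_peers: usize,
    pub ready_max_blocks_behind: u32,
    pub enforce_on_test_networks: bool,
}

/// `/healthy`: 200 if there are enough recently live peers, else 503.
pub fn healthy_status(cfg: &HealthConfig, live_peers: usize) -> u16 {
    if live_peers >= cfg.min_connected_peers { 200 } else { 503 }
}

/// `/ready`: 200 only if the moving-average signal says "near tip" AND the
/// estimated lag is within the hard cap. On test networks the check is
/// skipped unless `enforce_on_test_networks` is set.
pub fn ready_status(cfg: &HealthConfig, network: Network, near_tip: bool, estimated_lag: u32) -> u16 {
    if network != Network::Mainnet && !cfg.enforce_on_test_networks {
        return 200;
    }
    if near_tip && estimated_lag <= cfg.ready_max_blocks_behind { 200 } else { 503 }
}
```

Requiring both signals means a brief dip in the moving average, or a single lagging block estimate, is enough to take the node out of rotation.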

Running lightwalletd with zebra

Zebra’s RPC methods can support a lightwalletd service backed by zebrad. We recommend using zcash/lightwalletd because we use it in testing. Other lightwalletd forks have limited support; see the Sync lightwalletd section for more info.

Note: You can also use docker to run lightwalletd with zebra. Please see our docker documentation for more information.


Configure zebra for lightwalletd

We need a zebra configuration file. First, we create a file with the default settings:

zebrad generate -o ~/.config/zebrad.toml

The above command places the generated zebrad.toml config file in the default preferences directory of Linux. For other OSes default locations see here.

Tweak the following option in order to prepare for lightwalletd setup.

JSON-RPC

We need to configure Zebra to behave as an RPC endpoint. The standard RPC port for Zebra is 8232 for Mainnet and 18232 for Testnet.

Starting with Zebra v2.0.0, a cookie authentication method like the one used by the zcashd node is enabled by default for the RPC server. However, lightwalletd currently does not support cookie authentication, so we need to disable this authentication method to use Zebra as a backend for lightwalletd.

For example, to use Zebra as a lightwalletd backend on Mainnet, give it this

~/.config/zebrad.toml:

[rpc]

# listen for RPC queries on localhost

listen_addr = '127.0.0.1:8232'

# automatically use multiple CPU threads

parallel_cpu_threads = 0

# disable cookie auth

enable_cookie_auth = false

WARNING: This config allows multiple Zebra instances to share the same RPC port. See the RPC config documentation for details.
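With cookie authentication disabled, any HTTP client can call Zebra’s RPC endpoint without credentials, which is what lightwalletd relies on. As a minimal sketch of such a call, the following builds a JSON-RPC 1.0 request like the curl example in the mining guide and sends it with the Rust standard library. The address is the Mainnet listen_addr above; getblockchaininfo is used as an example method, and the helper names are hypothetical.

```rust
use std::io::{Read, Write};
use std::net::TcpStream;

/// Builds a JSON-RPC 1.0 request body with no parameters.
/// `method` and `id` are assumed to need no JSON escaping.
pub fn rpc_body(method: &str, id: &str) -> String {
    format!(r#"{{"jsonrpc":"1.0","id":"{}","method":"{}","params":[]}}"#, id, method)
}

/// Wraps `body` in an HTTP/1.1 POST to `/`. No Authorization header is sent,
/// which only works when `enable_cookie_auth = false`.
pub fn http_post(host: &str, body: &str) -> String {
    format!(
        "POST / HTTP/1.1\r\nHost: {}\r\nContent-Type: application/json\r\nContent-Length: {}\r\nConnection: close\r\n\r\n{}",
        host,
        body.len(),
        body
    )
}

/// Sends a parameterless RPC call to `addr` (e.g. "127.0.0.1:8232")
/// and returns the raw HTTP response, headers included.
pub fn call(addr: &str, method: &str) -> std::io::Result<String> {
    let mut stream = TcpStream::connect(addr)?;
    stream.write_all(http_post(addr, &rpc_body(method, "sketch")).as_bytes())?;
    let mut response = String::new();
    stream.read_to_string(&mut response)?;
    Ok(response)
}
```

A real client would parse the JSON body with a library such as serde_json instead of working with the raw response text.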

Sync Zebra

With the configuration in place you can start synchronizing Zebra with the Zcash blockchain. This may take a while depending on your hardware.

zebrad start

Zebra will display information about sync process:

...

zebrad::commands::start: estimated progress to chain tip sync_percent=10.783 %

...

Until eventually it will get there:

...

zebrad::commands::start: finished initial sync to chain tip, using gossiped blocks sync_percent=100.000 %

...
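If you script around the initial sync, for example to start lightwalletd only after Zebra reaches the tip, you can watch the sync_percent field in these log lines. A minimal sketch, assuming the log format shown above (which may change between releases); the function names are hypothetical.

```rust
/// Extracts the `sync_percent` value from a zebrad progress log line.
/// Assumes the `sync_percent=<number>` format shown above, with or without
/// a space before `%`.
pub fn sync_percent(line: &str) -> Option<f64> {
    const KEY: &str = "sync_percent=";
    let start = line.find(KEY)? + KEY.len();
    // Keep only the numeric part, stopping at `%`, a space, or anything else.
    let number: String = line[start..]
        .chars()
        .take_while(|c| c.is_ascii_digit() || *c == '.')
        .collect();
    number.parse().ok()
}

/// True once a log line reports that the initial sync reached the tip.
pub fn reached_tip(line: &str) -> bool {
    line.contains("finished initial sync to chain tip")
}
```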

You can interrupt the process at any time with ctrl-c, and Zebra will resume from around the block it was downloading when you stopped it.

When deploying for production infrastructure, the above command can be run as a service or daemon.

For implementing zebra as a service please see here.

Download and build lightwalletd

While you synchronize Zebra you can install lightwalletd.

Before installing, you need to have go in place. Please visit the go install page with download and installation instructions.

With go installed and in your path, download and install lightwalletd:

git clone https://github.com/zcash/lightwalletd

cd lightwalletd

make

make install

If everything went well, you should have a lightwalletd binary in ~/go/bin/.

Sync lightwalletd

Please make sure you have zebrad running (with RPC endpoint and up to date blockchain) to synchronize lightwalletd.

- lightwalletd requires a zcash.conf file; however, this file can be empty if you are using the default Zebra RPC endpoint (127.0.0.1:8232) and the zcash/lightwalletd fork.
  - Some lightwalletd forks also require an rpcuser and rpcpassword, but Zebra ignores them if it receives them from lightwalletd.
  - When using a non-default port, use rpcport=28232 and rpcbind=127.0.0.1.
  - When using testnet, use testnet=1.

- For production setups, lightwalletd requires a cert.pem. For more information on how to do this please see here.
  - lightwalletd can run without the certificate (with the --no-tls-very-insecure flag); however, this is not recommended for production environments.
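If you generate zcash.conf from a provisioning script, the rules above reduce to a few optional lines. This is a minimal sketch with a hypothetical ZcashConf type. The keys and the 28232 example port come from the list above.

```rust
/// Options for lightwalletd's zcash.conf, taken from the list above.
#[derive(Default)]
pub struct ZcashConf {
    /// Set only when Zebra's RPC server is not on the default 127.0.0.1:8232.
    pub rpcbind: Option<String>,
    pub rpcport: Option<u16>,
    /// Set when Zebra runs on Testnet.
    pub testnet: bool,
}

impl ZcashConf {
    /// Renders the file; an all-default config renders as an empty file,
    /// which is enough for the zcash/lightwalletd fork.
    pub fn render(&self) -> String {
        let mut out = String::new();
        if let Some(bind) = &self.rpcbind {
            out.push_str(&format!("rpcbind={}\n", bind));
        }
        if let Some(port) = self.rpcport {
            out.push_str(&format!("rpcport={}\n", port));
        }
        if self.testnet {
            out.push_str("testnet=1\n");
        }
        out
    }
}
```

You could write the result with std::fs::write to the path you pass to --zcash-conf-path, for example ~/.config/zcash.conf.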

With the cert in ./ and an empty zcash.conf we can start the sync with:

lightwalletd --zcash-conf-path ~/.config/zcash.conf --data-dir ~/.cache/lightwalletd --log-file /dev/stdout

By default, the lightwalletd service listens on 127.0.0.1:9067.

lightwalletd will perform its own synchronization; while it does, you will see messages like:

...

{"app":"lightwalletd","level":"info","msg":"Ingestor adding block to cache: 748000","time":"2022-05-28T19:25:49-03:00"}

{"app":"lightwalletd","level":"info","msg":"Ingestor adding block to cache: 749540","time":"2022-05-28T19:25:53-03:00"}

{"app":"lightwalletd","level":"info","msg":"Ingestor adding block to cache: 751074","time":"2022-05-28T19:25:57-03:00"}

...

Wait until lightwalletd is in sync before connecting any wallet to it. You will know it is in sync when these messages stop appearing.

Run tests

The Zebra team created tests for the interaction of zebrad and lightwalletd.

To run all the Zebra lightwalletd tests:

- install lightwalletd
- install protoc
- build Zebra with --features=lightwalletd-grpc-tests

Please refer to acceptance tests documentation in the Lightwalletd tests section. When running tests that use a cached lightwalletd state, the test harness will use a platform default cache directory (for example, ~/.cache/lwd on Linux) unless overridden via the LWD_CACHE_DIR environment variable.

Connect a wallet to lightwalletd

The final goal is to connect wallets to the lightwalletd service backed by Zebra.

For demo purposes we used zecwallet-cli with the adityapk00/lightwalletd fork. We have not yet tested zecwallet-cli with zcash/lightwalletd.

Make sure both zebrad and lightwalletd are running and listening.

Download and build the cli-wallet

cargo install --locked --git https://github.com/adityapk00/zecwallet-light-cli

The zecwallet-cli binary will be at ~/.cargo/bin/zecwallet-cli.

Run the wallet

$ zecwallet-cli --server 127.0.0.1:9067

Lightclient connecting to http://127.0.0.1:9067/

{

"result": "success",

"latest_block": 1683911,

"total_blocks_synced": 49476

}

Ready!

(main) Block:1683911 (type 'help') >>

Zebra zk-SNARK Parameters

The privacy features provided by Zcash are backed by different zk-SNARK proving systems. These are cryptographic primitives that allow a prover to convince a verifier that a statement is true without revealing any information beyond the proof itself.

One of these proving systems is Groth16, which is used by Zcash transactions of version 4 and greater. More specifically, it is used in the Sapling Spend and Output description circuits and in the Sprout JoinSplit description circuit.

https://zips.z.cash/protocol/protocol.pdf#groth

The Groth16 proving system requires a trusted setup, which produces a set of predefined parameters that every node must have in order to verify the proofs that show up in the blockchain.

These parameters are built into the zebrad binary. They are predefined keys that will allow verification of the circuits. They were initially obtained by this process.

Three sets of parameters are needed, one for each circuit; this is part of the Zcash consensus protocol:

https://zips.z.cash/protocol/protocol.pdf#grothparameters

Zebra uses the bellman crate’s Groth16 implementation for all Groth16 types.

Each time a transaction contains any Sprout JoinSplit, Sapling Spend, or Sapling Output, these loaded parameters are used in the verification process. Zebra verifies proofs in parallel and in batches, and these parameters are used for each verification.

The first time any parameters are used, Zebra automatically parses all of the parameters. This work is only done once.
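The parse-once behaviour can be illustrated with the standard library’s OnceLock: the first caller runs the parser, concurrent callers wait for it, and later callers get the cached value. This is a hypothetical sketch; Params and parse_params stand in for the real verifying keys and their deserialization, and Zebra’s own code may use a different lazy-initialization mechanism.

```rust
use std::sync::atomic::{AtomicUsize, Ordering};
use std::sync::OnceLock;

/// Counts how many times the (expensive) parser actually runs.
pub static PARSE_COUNT: AtomicUsize = AtomicUsize::new(0);

/// Hypothetical stand-in for the parsed Groth16 verifying keys.
pub struct Params {
    /// One parameter set per circuit (Sapling Spend, Sapling Output, Sprout JoinSplit).
    pub circuits: usize,
}

/// Hypothetical parser; real code would deserialize the built-in parameter bytes.
fn parse_params() -> Params {
    PARSE_COUNT.fetch_add(1, Ordering::SeqCst);
    Params { circuits: 3 }
}

/// Returns the shared parameters, parsing them on first use only.
pub fn params() -> &'static Params {
    static PARAMS: OnceLock<Params> = OnceLock::new();
    PARAMS.get_or_init(parse_params)
}
```

Every verification then borrows the same &'static Params, so batch and parallel verifiers share one copy without further locking.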

Mining Zcash with zebra

Zebra’s RPC methods support miners and mining pools.


Download Zebra

The easiest way to run Zebra for mining is with our docker images.

If you have installed Zebra another way, follow the instructions below to start mining:

Configure zebra for mining

We need a configuration file. First, we create a file with the default settings:

mkdir -p ~/.config

zebrad generate -o ~/.config/zebrad.toml

The above command places the generated zebrad.toml config file in the default preferences directory of Linux. For other OSes default locations see here.

Tweak the following options in order to prepare for mining.

Miner address

A miner address is required. At the moment, Zebra only allows P2PKH or P2SH transparent addresses.

[mining]

miner_address = 't3dvVE3SQEi7kqNzwrfNePxZ1d4hUyztBA1'

The above address is the ZF Mainnet funding stream address. It is used here purely as an example.

RPC section

This change is required for zebra to behave as an RPC endpoint. The standard port for RPC endpoint is 8232 on mainnet.

[rpc]

listen_addr = "127.0.0.1:8232"

Running zebra

If the configuration file is in the default directory, then zebra will just read from it. All we need to do is to start zebra as follows:

zebrad

You can specify the configuration file path with -c /path/to/config.file.

Wait until Zebra is in sync. You will see the sync at 100% when this happens:

...

2023-02-21T18:41:09.088931Z INFO {zebrad="4daedbc" net="Main"}: zebrad::components::sync::progress: finished initial sync to chain tip, using gossiped blocks sync_percent=100.000% current_height=Height(1992055) network_upgrade=Nu5 remaining_sync_blocks=1 time_since_last_state_block=0s

...

Testing the setup

The easiest way to check your setup is to call the getblocktemplate RPC method and check the result.

Starting with Zebra v2.0.0, a cookie authentication method similar to the one used by the zcashd node is enabled by default. The cookie is stored in the default cache directory when the RPC endpoint starts and is deleted at shutdown. By default, the cookie is located in the cache directory; for example, on Linux, it may be found at /home/user/.cache/zebra/.cookie. You can change the cookie’s location using the rpc.cookie_dir option in the configuration, or disable cookie authentication altogether by setting rpc.enable_cookie_auth to false. The contents of the cookie file look like this:

__cookie__:YwDDua GzvtEmWG6KWnhgd9gilo5mKdi6m38v__we3Ko=

The password is an encoded, randomly generated string. You can use it in your call as follows, replacing <password> with the part of the cookie file after __cookie__: (the RPC endpoint is 127.0.0.1:8232 on Mainnet):

$ curl --silent --data-binary '{"jsonrpc": "1.0", "id":"curltest", "method": "getblocktemplate", "params": [] }' -H 'Content-type: application/json' http://__cookie__:<password>@127.0.0.1:8232/ | jq

If you can see something similar to the following then you are good to go.


{

"result": {

"capabilities": [

"proposal"

],

"version": 4,

"previousblockhash": "000000000173ae4123b7cb0fbed51aad913a736b846eaa9f23c3bb7f6c65b011",

"blockcommitmentshash": "84ac267e51ce10e6e4685955e3a3b08d96a7f862d74b2d60f141c8e91f1af3a7",

"lightclientroothash": "84ac267e51ce10e6e4685955e3a3b08d96a7f862d74b2d60f141c8e91f1af3a7",

"finalsaplingroothash": "84ac267e51ce10e6e4685955e3a3b08d96a7f862d74b2d60f141c8e91f1af3a7",

"defaultroots": {

"merkleroot": "5e312942e7f024166f3cb9b52627c07872b6bfa95754ccc96c96ca59b2938d11",

"chainhistoryroot": "97be47b0836d629f094409f5b979e011cbdb51d4a7e6f1450acc08373fe0901a",

"authdataroot": "dc40ac2b3a4ae92e4aa0d42abeea6934ef91e6ab488772c0466d7051180a4e83",

"blockcommitmentshash": "84ac267e51ce10e6e4685955e3a3b08d96a7f862d74b2d60f141c8e91f1af3a7"

},

"transactions": [

{

"data": "0400008085202f890120a8b2e646b5c5ee230a095a3a19ffea3c2aa389306b1ee3c31e9abd4ac92e08010000006b483045022100fb64eac188cb0b16534e0bd75eae7b74ed2bdde20102416f2e2c18638ec776dd02204772076abbc4f9baf19bd76e3cdf953a1218e98764f41ebc37b4994886881b160121022c3365fba47d7db8422d8b4a410cd860788152453f8ab75c9e90935a7a693535ffffffff015ca00602000000001976a914411d4bb3c17e67b5d48f1f6b7d55ee3883417f5288ac000000009d651e000000000000000000000000",

"hash": "63c939ad16ef61a1d382a2149d826e3a9fe9a7dbb8274bfab109b8e70f469012",

"authdigest": "ffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffff",

"depends": [],

"fee": 11300,

"sigops": 1,

"required": false

},

{

"data": "0400008085202f890192e3403f2fb04614a7faaf66b5f59a78101fe3f721aee3291dea3afcc5a4080d000000006b483045022100b39702506ff89302dcde977e3b817c8bb674c4c408df5cd14b0cc3199c832be802205cbbfab3a14e80c9765af69d21cd2406cea4e8e55af1ff5b64ec00a6df1f5e6b01210207d2b6f6b3b500d567d5cf11bc307fbcb6d342869ec1736a8a3a0f6ed17f75f4ffffffff0147c717a8040000001976a9149f68dd83709ae1bc8bc91d7068f1d4d6418470b688ac00000000000000000000000000000000000000",

"hash": "d5c6e9eb4c378c8304f045a43c8a07c1ac377ab6b4d7206e338eda38c0f196ba",

"authdigest": "ffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffff",

"depends": [],

"fee": 185,

"sigops": 1,

"required": false

},

{

"data": "0400008085202f8901dca2e357fe25c062e988a90f6e055bf72b631f286833bcdfcc140b47990e22cc040000006a47304402205423166bba80f5d46322a7ea250f2464edcd750aa8d904d715a77e5eaad4417c0220670c6112d7f6dc3873143bdf5c3652c49c3e306d4d478632ca66845b2bfae2a6012102bc7156d237dbfd2f779603e3953dbcbb3f89703d21c1f5a3df6f127aa9b10058feffffff23da1b0000000000001976a914227ea3051630d4a327bcbe3b8fcf02d17a2c8f9a88acc2010000000000001976a914d1264ed5acc40e020923b772b1b8fdafff2c465c88ac661c0000000000001976a914d8bae22d9e23bfa78d65d502fbbe32e56f349e5688ac02210000000000001976a91484a1d34e31feac43b3965beb6b6dedc55d134ac588ac92040000000000001976a91448d9083a5d92124e8c1b6a2d895874bb6a077d1d88ac78140000000000001976a91433bfa413cd714601a100e6ebc99c49a8aaec558888ac4c1d0000000000001976a91447aebb77822273df8c9bc377e18332ce2af707f488ac004c0000000000001976a914a095a81f6fb880c0372ad3ea74366adc1545490888ac16120000000000001976a914d1f2052f0018fb4a6814f5574e9bc1befbdfce9388acbfce0000000000001976a914aa052c0181e434e9bbd87566aeb414a23356116088ac5c2b0000000000001976a914741a131b859e83b802d0eb0f5d11c75132a643a488ac40240000000000001976a914c5e62f402fe5b13f31f5182299d3204c44fc2d5288ace10a0000000000001976a914b612ff1d9efdf5c45eb8e688764c5daaf482df0c88accc010000000000001976a9148692f64b0a1d7fc201d7c4b86f5a6703b80d7dfe88aca0190000000000001976a9144c998d1b661126fd82481131b2abdc7ca870edc088ac44020000000000001976a914bd60ea12bf960b3b27c9ea000a73e84bbe59591588ac00460000000000001976a914b0c711a99ff21f2090fa97d49a5403eaa3ad9e0988ac9a240000000000001976a9145a7c7d50a72355f07340678ca2cba5f2857d15e788ac2a210000000000001976a91424cb780ce81cc384b61c5cc5853585dc538eb9af88ac30430000000000001976a9148b9f78cb36e4126920675fe5420cbd17384db44288ac981c0000000000001976a9145d1c183b0bde829b5363e1007f4f6f1d29d3bb4a88aca0140000000000001976a9147f44beaacfb56ab561648a2ba818c33245b39dbb88acee020000000000001976a914c485f4edcefcf248e883ad1161959efc14900ddf88acc03a0000000000001976a91419bfbbd0b5f63590290e063e35285fd070a36b6a88ac98030000000000001976a9147a557b673a45a255ff21f
3746846c28c1b1e53b988acdc230000000000001976a9146c1bf6a4e0a06d3498534cec7e3b976ab5c2dcbc88ac3187f364000000001976a914a1a906b35314449892f2e6d674912e536108e06188ace61e0000000000001976a914fcaafc8ae90ac9f5cbf139d626cfbd215064034888ace4020000000000001976a914bb1bfa7116a9fe806fb3ca30fa988ab8f98df94088ac88180000000000001976a9146a43a0a5ea2b421c9134930d037cdbcd86b9e84c88ac0a3c0000000000001976a91444874ae13b1fa73f900b451f4b69dbabb2b2f93788ac0a410000000000001976a914cd89fbd4f8683d97c201e34c8431918f6025c50d88ac76020000000000001976a91482035b454977ca675328c4c7de097807d5c842d688ac1c160000000000001976a9142c9a51e381b27268819543a075bbe71e80234a6b88ac70030000000000001976a914a8f48fd340da7fe1f8bb13ec5856c9d1f5f50c0388ac6c651e009f651e000000000000000000000000",

"hash": "2e9296d48f036112541b39522b412c06057b2d55272933a5aff22e17aa1228cd",

"authdigest": "ffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffff",

"depends": [],

"fee": 1367,

"sigops": 35,

"required": false

}

],

"coinbasetxn": {

"data": "0400008085202f89010000000000000000000000000000000000000000000000000000000000000000ffffffff050378651e00ffffffff04b4e4e60e0000000017a9140579e6348f398c5e78611da902ca457885cda2398738c94d010000000017a9145d190948e5a6982893512c6d269ea14e96018f7e8740787d010000000017a914931fec54c1fea86e574462cc32013f5400b8912987286bee000000000017a914d45cb1adffb5215a42720532a076f02c7c778c90870000000078651e000000000000000000000000",

"hash": "f77c29f032f4abe579faa891c8456602f848f423021db1f39578536742e8ff3e",

"authdigest": "ffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffff",

"depends": [],

"fee": -12852,

"sigops": 0,

"required": true

},

"longpollid": "0001992055c6e3ad7916770099070000000004516b4994",

"target": "0000000001a11f00000000000000000000000000000000000000000000000000",

"mintime": 1677004508,

"mutable": [

"time",

"transactions",

"prevblock"

],

"noncerange": "00000000ffffffff",

"sigoplimit": 20000,

"sizelimit": 2000000,

"curtime": 1677004885,

"bits": "1c01a11f",

"height": 1992056,

"maxtime": 1677009907

},

"id": "curltest"

}
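The bits and target fields in this output are two encodings of the same difficulty threshold. The following sketch expands the compact bits value into the 256-bit target, assuming the Bitcoin-style compact encoding that Zcash inherits: one exponent byte followed by a three-byte mantissa. With the sample values above, 1c01a11f expands to the target string shown. The function names are hypothetical.

```rust
/// Expands a compact `bits` value into a 32-byte big-endian target.
/// Returns None for encodings with the sign bit set or an exponent > 32.
pub fn compact_to_target(bits: u32) -> Option<[u8; 32]> {
    let exponent = (bits >> 24) as usize;
    let mantissa = bits & 0x00ff_ffff;
    if mantissa & 0x0080_0000 != 0 || exponent > 32 {
        return None;
    }
    let mut target = [0u8; 32];
    // The three mantissa bytes are the most significant bytes of a number
    // that is `exponent` bytes long.
    let be = mantissa.to_be_bytes();
    for (k, byte) in be[1..].iter().enumerate() {
        // Byte k lands (exponent - 1 - k) bytes from the least significant end;
        // bytes that would fall past that end are dropped.
        if let Some(from_right) = exponent.checked_sub(1 + k) {
            target[31 - from_right] = *byte;
        }
    }
    Some(target)
}

/// Lowercase hex, matching the `target` field in the RPC output.
pub fn to_hex(bytes: &[u8]) -> String {
    bytes.iter().map(|b| format!("{:02x}", b)).collect()
}
```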

Run a mining pool

Just point your mining pool software to the Zebra RPC endpoint (127.0.0.1:8232). Zebra supports the RPC methods needed to run most mining pool software.

If you want to run an experimental s-nomp mining pool with Zebra on testnet, please refer to this document for a very detailed guide. s-nomp is not compatible with NU5, so some mining functions are disabled.

If your mining pool software needs additional support, or if you as a miner need additional RPC methods, then please open a ticket in the Zebra repository.
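Pool software or custom clients that call the RPC endpoint directly must send the cookie as HTTP Basic credentials, which is what curl does with the user:password@host URL in the Testing the setup example. This sketch reads the cookie file and builds the Authorization header value using only the Rust standard library, so it includes a small RFC 4648 Base64 encoder. The cookie path is the Linux default mentioned earlier and may differ on your system; the function names are hypothetical.

```rust
use std::io;
use std::path::Path;

const ALPHABET: &[u8; 64] = b"ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789+/";

/// Standard padded Base64, as used by HTTP Basic authentication.
pub fn base64_encode(input: &[u8]) -> String {
    let mut out = String::with_capacity((input.len() + 2) / 3 * 4);
    for chunk in input.chunks(3) {
        // Pack up to three bytes into a 24-bit group, zero-padding the tail.
        let b0 = chunk[0] as u32;
        let b1 = *chunk.get(1).unwrap_or(&0) as u32;
        let b2 = *chunk.get(2).unwrap_or(&0) as u32;
        let n = (b0 << 16) | (b1 << 8) | b2;
        out.push(ALPHABET[(n >> 18) as usize & 63] as char);
        out.push(ALPHABET[(n >> 12) as usize & 63] as char);
        out.push(if chunk.len() > 1 { ALPHABET[(n >> 6) as usize & 63] as char } else { '=' });
        out.push(if chunk.len() > 2 { ALPHABET[n as usize & 63] as char } else { '=' });
    }
    out
}

/// Builds the `Authorization` header value from cookie file contents
/// of the form `__cookie__:<password>`.
pub fn basic_auth_value(cookie_contents: &str) -> Option<String> {
    let credentials = cookie_contents.trim();
    // Reject contents without the user/password separator.
    credentials.split_once(':')?;
    Some(format!("Basic {}", base64_encode(credentials.as_bytes())))
}

/// Reads the cookie file, e.g. ~/.cache/zebra/.cookie on Linux.
pub fn auth_header_from_file(path: &Path) -> io::Result<Option<String>> {
    let contents = std::fs::read_to_string(path)?;
    Ok(basic_auth_value(&contents))
}
```

Because Zebra regenerates the cookie each time the RPC endpoint starts, a long-running client should re-read the file after Zebra restarts.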

How to mine with Zebra on testnet

Important

s-nomp has not been updated for NU5, so you’ll need the fixes in the branches below.

These fixes disable mining pool operator payments and miner payments: they just pay to the address configured for the node.

Install, run, and sync Zebra

- Configure zebrad.toml:
  - change the network.network config to Testnet
  - add your testnet transparent address in mining.miner_address, or you can use the ZF testnet address t27eWDgjFYJGVXmzrXeVjnb5J3uXDM9xH9v
  - ensure that there is an rpc.listen_addr in the config to enable the RPC server
  - disable the cookie auth system by changing rpc.enable_cookie_auth to false

Example config:

[consensus]
checkpoint_sync = true

[mempool]
eviction_memory_time = '1h'
tx_cost_limit = 80000000

[metrics]

[network]
crawl_new_peer_interval = '1m 1s'
initial_mainnet_peers = [
    'dnsseed.z.cash:8233',
    'dnsseed.str4d.xyz:8233',
    'mainnet.seeder.zfnd.org:8233',
    'mainnet.is.yolo.money:8233',
]
initial_testnet_peers = [
    'dnsseed.testnet.z.cash:18233',
    'testnet.seeder.zfnd.org:18233',
    'testnet.is.yolo.money:18233',
]
listen_addr = '0.0.0.0:18233'
network = 'Testnet'
peerset_initial_target_size = 25

[rpc]
debug_force_finished_sync = false
parallel_cpu_threads = 1
listen_addr = '127.0.0.1:18232'
enable_cookie_auth = false

[state]
cache_dir = '/home/ar/.cache/zebra'
delete_old_database = true
ephemeral = false

[sync]
checkpoint_verify_concurrency_limit = 1000
download_concurrency_limit = 50
full_verify_concurrency_limit = 20
parallel_cpu_threads = 0

[tracing]
buffer_limit = 128000
force_use_color = false
use_color = true
use_journald = false

[mining]
miner_address = 't27eWDgjFYJGVXmzrXeVjnb5J3uXDM9xH9v'

- Run Zebra with the config you created:

zebrad -c zebrad.toml

- Wait for Zebra to sync to the testnet tip. This takes 8-12 hours on testnet (or 2-3 days on mainnet) as of October 2023.

Install s-nomp

General instructions with Debian/Ubuntu examples

Install dependencies

- Install redis and run it on the default port: https://redis.io/docs/getting-started/

sudo apt-get install lsb-release
curl -fsSL https://packages.redis.io/gpg | sudo gpg --dearmor -o /usr/share/keyrings/redis-archive-keyring.gpg
echo "deb [signed-by=/usr/share/keyrings/redis-archive-keyring.gpg] https://packages.redis.io/deb $(lsb_release -cs) main" | sudo tee /etc/apt/sources.list.d/redis.list
sudo apt-get update
sudo apt-get install redis
redis-server

- Install and activate a node version manager (e.g. nodenv or nvm)

- Install boost and libsodium development libraries:

sudo apt install libboost-all-dev
sudo apt install libsodium-dev

Install s-nomp

- git clone https://github.com/ZcashFoundation/s-nomp
- cd s-nomp
- Use the Zebra fixes:

git checkout zebra-mining

- Use node 10:

nodenv install 10
nodenv local 10

or

nvm install 10
nvm use 10

- Update dependencies and install:

export CXXFLAGS="-std=gnu++17"
npm update
npm install

Arch-specific instructions

Install s-nomp

- Install Redis, and development libraries required by s-nomp:

sudo pacman -S redis boost libsodium

- Install nvm, Python 3.10 and virtualenv:

paru -S python310 nvm
sudo pacman -S python-virtualenv

- Start Redis:

sudo systemctl start redis

- Clone the repository:

git clone https://github.com/ZcashFoundation/s-nomp && cd s-nomp

- Use Node 10:

unset npm_config_prefix
source /usr/share/nvm/init-nvm.sh
nvm install 10
nvm use 10

- Use Python 3.10:

virtualenv -p 3.10 s-nomp
source s-nomp/bin/activate

- Update dependencies and install:

npm update
npm install

Run s-nomp

- Edit pool_configs/zcash.json so daemons[0].port is your Zebra port
- Run s-nomp using npm start

Note: the website will log an RPC error even when it is disabled in the config. This seems to be an s-nomp bug.

Install a CPU or GPU miner

Install dependencies

General instructions

- Install a statically compiled boost and icu.
- Install cmake.

Arch-specific instructions

sudo pacman -S cmake boost icu

Install nheqminer

We’re going to install nheqminer, which supports multiple CPU and GPU Equihash solvers, namely djezo, xenoncat, and tromp. We’re using tromp on a CPU in the following instructions since it is the easiest to install and use.

- git clone https://github.com/ZcashFoundation/nheqminer
- cd nheqminer
- Use the Zebra fixes: git checkout zebra-mining
- Follow the build instructions at https://github.com/nicehash/nheqminer#general-instructions, or run:

mkdir build

cd build

# Turn off `djezo` and `xenoncat`, which are enabled by default, and turn on `tromp` instead.

cmake -DUSE_CUDA_DJEZO=OFF -DUSE_CPU_XENONCAT=OFF -DUSE_CPU_TROMP=ON ..

make -j $(nproc)

Run miner

- Follow the run instructions at: https://github.com/nicehash/nheqminer#run-instructions

# you can use your own testnet address here

# miner and pool payments are disabled, configure your address on your node to get paid

./nheqminer -l 127.0.0.1:1234 -u tmRGc4CD1UyUdbSJmTUzcB6oDqk4qUaHnnh.worker1 -t 1

Notes:

- A typical solution rate is 2-4 Sols/s per core.
- nheqminer sometimes ignores Control-C. If that happens, you can quit it using:
  - killall nheqminer, or
  - Control-Z then kill %1
- Running nheqminer with a single thread (-t 1) can help avoid this issue.

Mining with Zebra in Docker

Zebra’s Docker images can be used for your mining operations. If you don’t have Docker, see the manual configuration instructions.

Using docker, you can start mining by running:

docker run --name zebra_local -e MINER_ADDRESS="t3dvVE3SQEi7kqNzwrfNePxZ1d4hUyztBA1" -e ZEBRA_RPC_PORT=8232 -p 8232:8232 zfnd/zebra:latest

This command starts a container on Mainnet and binds port 8232 on your Docker host. If you want to start generating blocks, you need to let Zebra sync first.

Note that you must pass the address for your mining rewards via the MINER_ADDRESS environment variable when you are starting the container, as we did with the ZF funding stream address above. The address we used starts with the prefix t3, meaning it is a Mainnet P2SH address. Please remember to set your own address for the rewards.

The port we mapped between the container and the host with the -p flag in the example above is Zebra’s default Mainnet RPC port.

Instead of listing the environment variables on the command line, you can use Docker’s --env-file flag to specify a file containing the variables. You can find more info at https://docs.docker.com/engine/reference/commandline/run/#env.

If you don’t want to set any environment variables, you can edit the docker/default-zebra-config.toml file, and pass it to Zebra before starting the container. There’s an example in docker/docker-compose.yml of how to do that.

If you want to mine on Testnet, you need to set the ZEBRA_NETWORK__NETWORK environment variable to Testnet and use a Testnet address for the rewards. For example, running

docker run --name zebra_local -e ZEBRA_NETWORK__NETWORK="Testnet" -e MINER_ADDRESS="t27eWDgjFYJGVXmzrXeVjnb5J3uXDM9xH9v" -e ZEBRA_RPC_PORT=18232 -p 18232:18232 zfnd/zebra:latest

will start a container on Testnet and bind port 18232 on your Docker host, which is the standard Testnet RPC port. Notice that we also used a different rewards address. It starts with the prefix t2, indicating that it is a Testnet address. A Mainnet address would prevent Zebra from starting on Testnet, and conversely, a Testnet address would prevent Zebra from starting on Mainnet.
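Since a mismatched address stops Zebra from starting, it can be worth checking the prefix before launching the container. A minimal sketch, assuming the standard Zcash transparent prefixes: t1 and t3 for Mainnet P2PKH and P2SH, tm and t2 for their Testnet counterparts. It checks the prefix only and does not validate the Base58Check checksum, so Zebra’s own address parsing remains authoritative. The function names are hypothetical.

```rust
/// Transparent address kinds distinguished by their two-character prefix.
#[derive(Debug, PartialEq)]
pub enum TransparentKind {
    MainnetP2pkh,
    MainnetP2sh,
    TestnetP2pkh,
    TestnetP2sh,
}

/// Classifies a transparent address by prefix only; it does NOT validate
/// the Base58Check checksum or length.
pub fn classify(address: &str) -> Option<TransparentKind> {
    match address.get(..2)? {
        "t1" => Some(TransparentKind::MainnetP2pkh),
        "t3" => Some(TransparentKind::MainnetP2sh),
        "tm" => Some(TransparentKind::TestnetP2pkh),
        "t2" => Some(TransparentKind::TestnetP2sh),
        _ => None,
    }
}

/// True if the address prefix belongs to the requested network.
pub fn matches_network(address: &str, testnet: bool) -> bool {
    match classify(address) {
        Some(TransparentKind::MainnetP2pkh | TransparentKind::MainnetP2sh) => !testnet,
        Some(TransparentKind::TestnetP2pkh | TransparentKind::TestnetP2sh) => testnet,
        None => false,
    }
}
```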

To connect to the RPC port, you will need the contents of the cookie file Zebra uses for authentication. By default, it is stored at /home/zebra/.cache/zebra/.cookie. You can print its contents by running

docker exec -it zebra_local cat /home/zebra/.cache/zebra/.cookie

If you want to avoid authentication, you can turn it off by setting

[rpc]

enable_cookie_auth = false

in Zebra’s config file before you start the container.

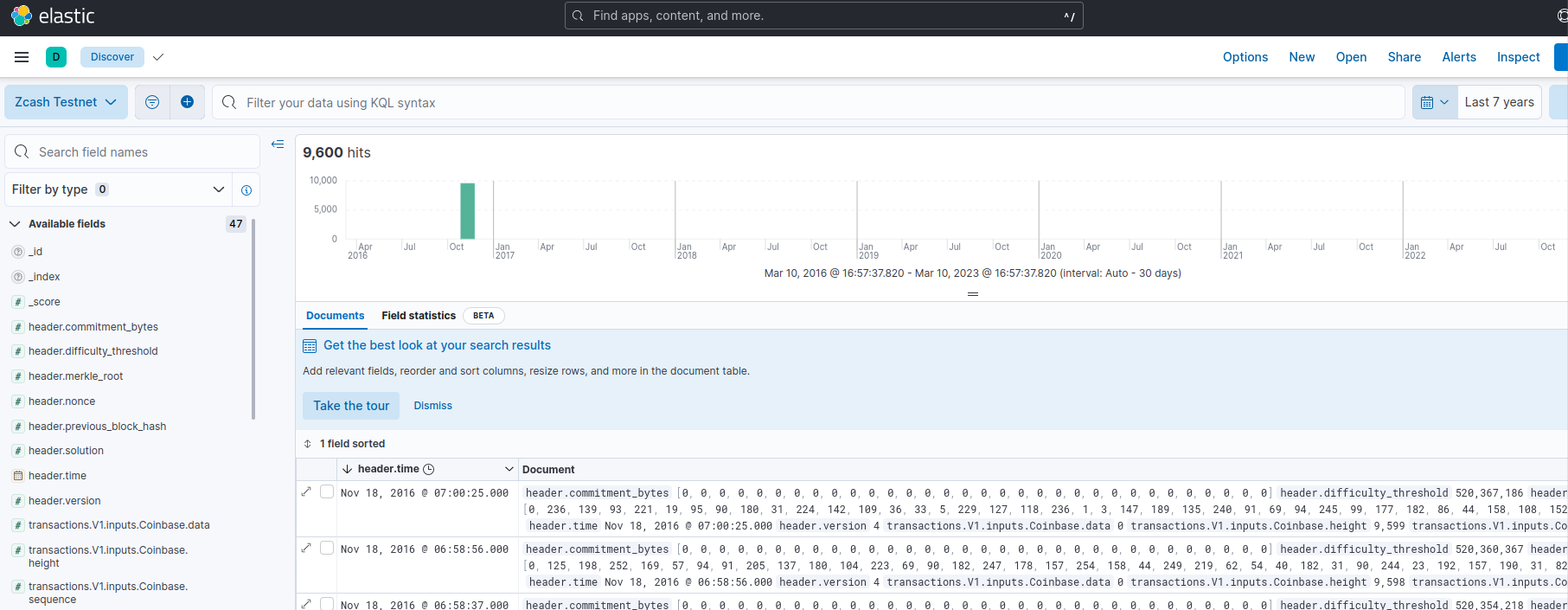

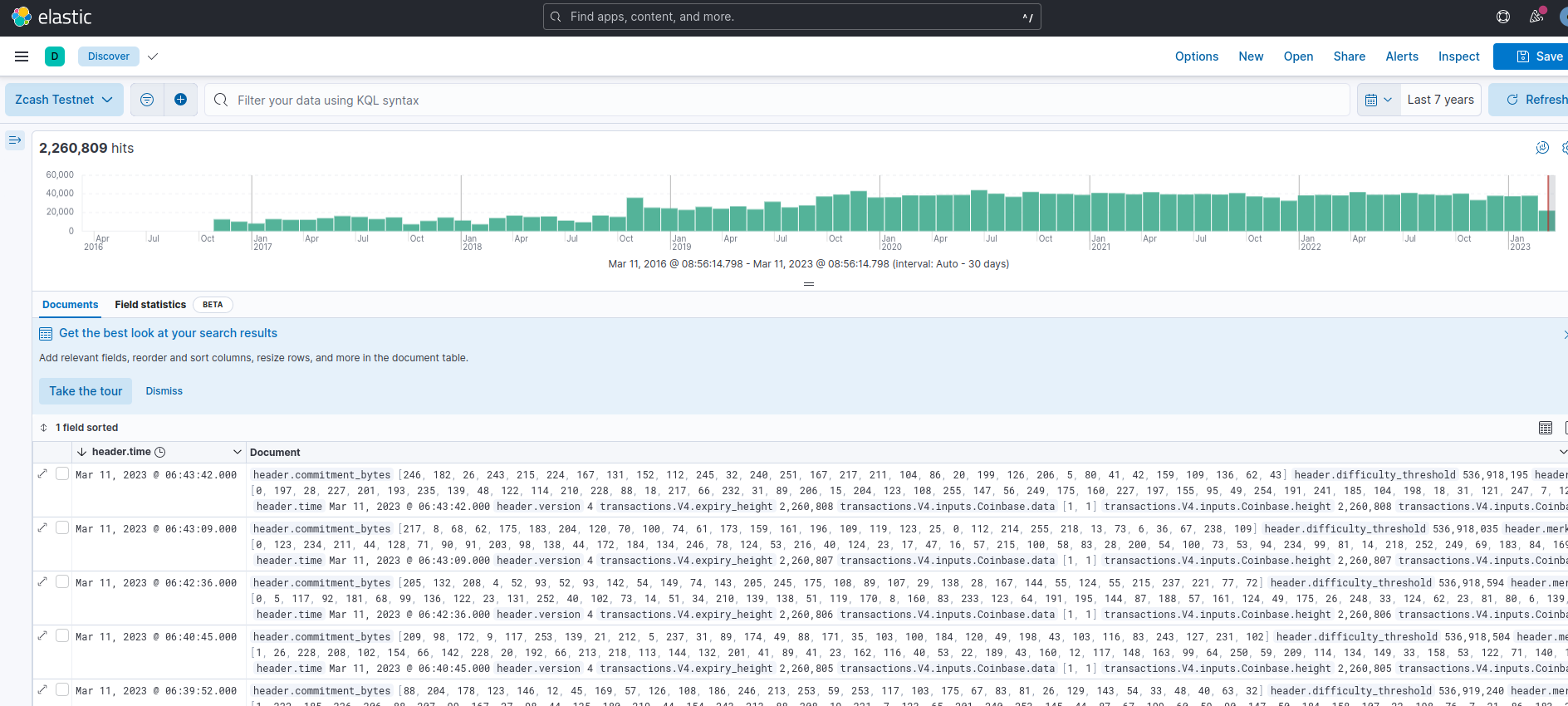

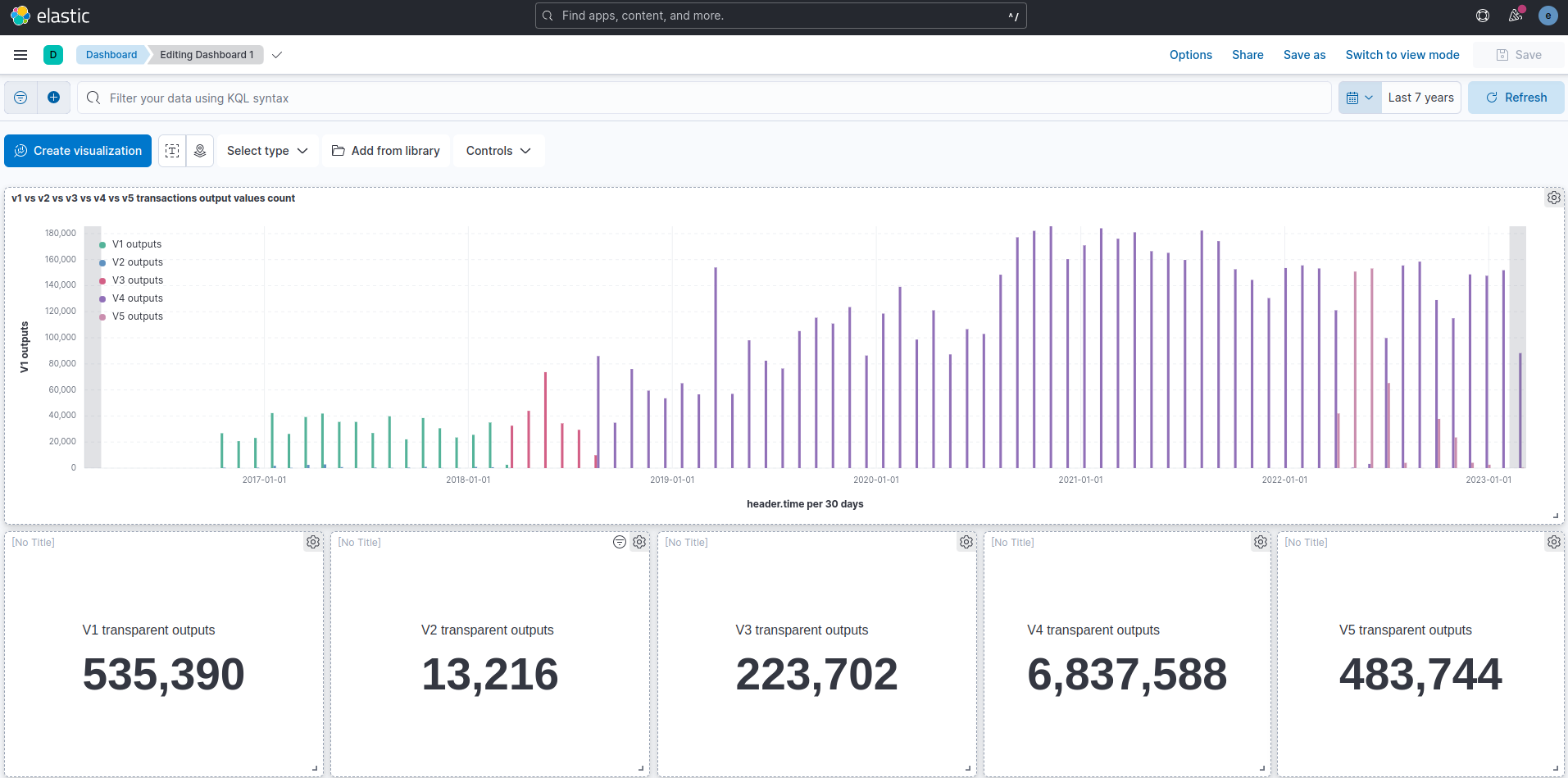

Kibana blockchain explorer

The goal here is to export block data from Zebra into an elasticsearch database and visualize it with the kibana front end.

Attention: This is an experimental feature tested only in the Zcash Testnet.

Elasticsearch support was introduced to Zebra in pull request #6274.

Download, build and run Elasticsearch

Installing Elasticsearch on Linux and macOS is easy: follow the .tar.gz installation guide.

Make sure you end up with an elasticsearch binary. Run it:

./bin/elasticsearch

The first time you run the database, the elastic password and the enrollment token for Kibana will be displayed on the screen (see here). Please save these, as you will need them.

Elasticsearch will listen on https://localhost:9200 by default.

Download, build and run Kibana

Installing Kibana on Linux and macOS is also easy: follow the .tar.gz installation guide.

Make sure you end up with a kibana binary and run it:

./bin/kibana

The first time you run kibana, it will provide a link for configuration:

Kibana has not been configured.

Go to http://localhost:5601/?code=405316 to get started.

Visit the URL to get started. You will need the Kibana enrollment token from Elasticsearch and the elastic password from the previous step.

Kibana will listen on http://localhost:5601 by default.

You are now ready to start sending data into Elasticsearch with Zebra.

Download and build Zebra with the elasticsearch feature

Elasticsearch support is an optional, experimental feature, so you need to build and install Zebra with the elasticsearch Rust feature enabled:

cargo install --features elasticsearch --locked --git https://github.com/ZcashFoundation/zebra zebrad

The Zebra binary will be installed at ~/.cargo/bin/zebrad.

Configure Zebra for elasticsearch

Generate a configuration file with the default settings:

zebrad generate -o ~/.config/zebrad.toml

The following changes are needed:

network section

Change the network field to Testnet. Mainnet should also work, but it is untested. Also, the preferred P2P port for Testnet is 18233, so you can optionally change the listen_addr field to 0.0.0.0:18233.
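These port numbers follow the usual Zcash convention: 8233 (P2P) and 8232 (RPC) on Mainnet, and 18233 and 18232 on Testnet. The Testnet RPC port matches the rpc.listen_addr used in the forking tutorial in this book. This small sketch, with hypothetical names, turns that convention into the listen_addr value for each network. It only encodes the convention. Zebra's actual defaults come from its generated config.

```rust
use std::net::{Ipv4Addr, SocketAddr, SocketAddrV4};

#[derive(Clone, Copy, Debug, PartialEq, Eq)]
enum Network {
    Mainnet,
    Testnet,
}

/// Conventional Zcash (P2P, RPC) ports for each network.
fn conventional_ports(network: Network) -> (u16, u16) {
    match network {
        Network::Mainnet => (8233, 8232),
        Network::Testnet => (18233, 18232),
    }
}

/// A `listen_addr` that accepts peers on all IPv4 interfaces.
fn p2p_listen_addr(network: Network) -> SocketAddr {
    let (p2p, _rpc) = conventional_ports(network);
    SocketAddr::V4(SocketAddrV4::new(Ipv4Addr::UNSPECIFIED, p2p))
}
```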

state section

Add the elastic password generated when you first ran the database to the elasticsearch_password field.

Run Zebra

If the config file is in the default path, running the binary with no arguments will start the sync:

zebrad

Syncing will take a while, but you can use Kibana to confirm that blocks are being inserted during the process.

Visualize your data

As soon as Zebra inserts the first batch of data into the Elasticsearch database, the zcash_testnet index will be created.

To observe the data, go to Analytics → Discover and create a new data view with the zcash_testnet index. Make sure you select the header.time field as the Timestamp field.

To see the data, use the calendar to select records from the last 10 years. The first blocks inserted are very old blocks from near the chain's genesis block.
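The wide time range is needed because a block header's time is the miner's timestamp, stored in the consensus encoding as seconds since the Unix epoch. Early blocks therefore carry dates from years ago. The sketch below converts such a timestamp into a UTC calendar date using Howard Hinnant's days-to-civil algorithm. It assumes the raw Unix-seconds form. Zebra may serialize header.time into Elasticsearch differently, for example as a date string. The function name is hypothetical.

```rust
/// Converts a block header timestamp (seconds since the Unix epoch, UTC)
/// into a (year, month, day) date, using Howard Hinnant's days-to-civil
/// algorithm for the proleptic Gregorian calendar.
fn header_time_to_date(time: u32) -> (i64, u32, u32) {
    let days = i64::from(time) / 86_400;
    // Count days from 0000-03-01 so each leap day is the last day of a "year".
    let z = days + 719_468;
    let era = z / 146_097; // 400-year eras; z is never negative for a u32 time
    let doe = z - era * 146_097; // day of era, 0..=146_096
    let yoe = (doe - doe / 1_460 + doe / 36_524 - doe / 146_096) / 365; // 0..=399
    let doy = doe - (365 * yoe + yoe / 4 - yoe / 100); // day of March-based year
    let mp = (5 * doy + 2) / 153; // month index with March = 0, 0..=11
    let day = (doy - (153 * mp + 2) / 5 + 1) as u32;
    let month = (if mp < 10 { mp + 3 } else { mp - 9 }) as u32;
    // January and February belong to the next civil year.
    let year = yoe + era * 400 + i64::from(month <= 2);
    (year, month, day)
}
```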

After a while the chain will be in sync.

You can now use all of Kibana's features, for example, to create dashboards for specific data visualizations.

Forking the Zcash Testnet with Zebra

The Zcash blockchain community consistently explores upgrades to the Zcash protocol, introducing new features to the consensus layer. This tutorial guides teams or individuals through forking the Zcash Testnet locally using Zebra, so they can test custom functionality in a private testnet environment.

At the time of writing, the current network upgrade on the Zcash Testnet is Nu5. The activation height of the next upgrade (Nu6) is not yet known, so in this tutorial we activate our own upgrade at a height after Nu5. This lets us observe our code crossing the network upgrade and then continuing on an isolated chain.

To achieve this, we’ll use Zebra as the node, s-nomp as the mining pool, and nheqminer as the Equihash miner.

Note: This tutorial aims to remain generally valid after Nu6, with adjustments to the network upgrade name and block heights.

Requirements

- A modified Zebra version capable of syncing up to our chosen activation height, including the changes from the code changes step.
- Mining tools:
  - s-nomp pool
  - nheqminer

You may have two Zebra versions: one for syncing up to the activation height and another (preferably built on top of the first one) with the network upgrade and additional functionality.

Note: For the mining setup, see How to mine with Zebra on testnet.

Sync the Testnet to a Block after Nu5 Activation

Select a height for the new network upgrade after Nu5. In the Zcash public testnet, Nu5 activation height is 1_842_420, and at the time of writing, the testnet was at around block 2_598_958. To avoid dealing with checkpoints, choose a block that is not only after Nu5 but also in the future. In this tutorial, we chose block 2_599_958, which is 1000 blocks ahead of the current testnet tip.
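The height choice is simple arithmetic: 2_598_958 + 1_000 = 2_599_958, which is after both the Nu5 activation height and the current tip. The sketch below, with hypothetical function names, encodes that rule. It also estimates how long the public Testnet needs to reach the chosen height, assuming blocks arrive at the 75-second post-Blossom target spacing. Real Testnet block times vary.

```rust
/// Post-Blossom target block spacing on Zcash, in seconds.
const TARGET_SPACING_SECS: u64 = 75;

/// Picks an activation height `margin` blocks past the current tip,
/// returning None unless it is after both the previous upgrade and the tip.
fn choose_activation_height(previous_upgrade: u32, tip: u32, margin: u32) -> Option<u32> {
    let height = tip.checked_add(margin)?;
    (height > previous_upgrade && height > tip).then_some(height)
}

/// Rough wall-clock estimate, assuming blocks arrive at the target spacing.
fn estimated_wait_secs(tip: u32, activation: u32) -> u64 {
    u64::from(activation.saturating_sub(tip)) * TARGET_SPACING_SECS
}
```

With this tutorial's numbers, estimated_wait_secs returns 75_000 seconds, or roughly 21 hours. That is consistent with the wait of up to 24 hours mentioned in this section.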

Clone Zebra, create a config file, and use state.debug_stop_at_height to halt the Zebra sync after reaching the chosen network upgrade block height (2_599_958).

Generate a Zebra config file:

zebrad generate -o myconf.toml

The relevant parts of the config file are:

[network]
listen_addr = "0.0.0.0:18233"
network = "Testnet"

[state]
debug_stop_at_height = 2599958
cache_dir = "/home/user/.cache/zebra"

Start Zebra with the modified config:

zebrad -c myconf.toml start

Wait for the sync to complete (this may take up to 24 hours, depending on testnet conditions). The result is a state up to the desired block in ~/.cache/zebra.

Code changes

We need to add the network upgrade variant to the zcash_primitives crate and Zebra.

librustzcash / zcash_primitives

Add the new network upgrade variant and a consensus branch ID, along with any other changes needed for the library to compile. Here are some examples:

- Sample internal commit: Unclean test code

- Public PR adding Nu6 behind a feature: librustzcash PR #1048

After the changes, check that the library can be built with cargo build --release.

Zebra

Here we are making changes to create an isolated network version of Zebra. In addition to your own changes, this Zebra version needs to have the following:

- Add a Nu6 variant to the NetworkUpgrade enum located in zebra-chain/src/parameters/network_upgrade.rs.
- Add a consensus branch ID: an arbitrary 32-bit value, written as 8 hex digits, that does not repeat any existing branch ID. We used 00000006 in our tests when writing this tutorial.
- Point to the modified zcash_primitives in zebra-chain/Cargo.toml. In my case, I had to replace the dependency line with something like:

  zcash_primitives = { git = "https://github.com/oxarbitrage/librustzcash", branch = "nu6-test", features = ["transparent-inputs"] }

- Make any fixes needed to compile.
- Ignore how far we are from the tip in get block template:

  zebra-rpc/src/methods/get_block_template_rpcs/get_block_template.rs

Unclean test commit for Zebra: Zebra commit

Make sure you can build the zebrad binary after the changes with cargo build --release.
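Consensus branch IDs are 32-bit values, and every network upgrade needs a distinct one. This sketch checks a candidate ID against the branch IDs of the upgrades deployed before Nu6 (Sprout uses 0). It then formats the ID as 8 hex digits, the form in which getblockchaininfo reports branches such as 00000006. The real Nu6 branch ID is not in the list because it was not known when this tutorial was written. The function name is hypothetical.

```rust
/// Consensus branch IDs of the upgrades deployed before Nu6,
/// as listed in the Zcash protocol specification.
const KNOWN_BRANCH_IDS: [(&str, u32); 6] = [
    ("Overwinter", 0x5ba8_1b19),
    ("Sapling", 0x76b8_09bb),
    ("Blossom", 0x2bb4_0e60),
    ("Heartwood", 0xf5b9_230b),
    ("Canopy", 0xe9ff_75a6),
    ("Nu5", 0xc2d6_d0b4),
];

/// Returns the branch ID as an 8-digit hex string, or an error if it is
/// zero (Sprout) or collides with an existing upgrade.
fn check_branch_id(id: u32) -> Result<String, String> {
    if id == 0 {
        return Err("0 is the Sprout branch ID".to_string());
    }
    if let Some((name, _)) = KNOWN_BRANCH_IDS.iter().find(|(_, known)| *known == id) {
        return Err(format!("{id:08x} is already used by {name}"));
    }
    Ok(format!("{id:08x}"))
}
```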

Configuration for isolated network

Now that you have a synced state and a modified Zebra version, it’s time to run your isolated network. Relevant parts of the configuration file:


[mempool]
debug_enable_at_height = 0
max_datacarrier_bytes = 83

[mining]
miner_address = 't27eWDgjFYJGVXmzrXeVjnb5J3uXDM9xH9v'

[network]
cache_dir = false
initial_testnet_peers = [
    "dnsseed.testnet.z.cash:18233",
    "testnet.seeder.zfnd.org:18233",
    "testnet.is.yolo.money:18233",
]
listen_addr = "0.0.0.0:18233"
network = "Testnet"

[rpc]
listen_addr = "0.0.0.0:18232"

[state]
cache_dir = "/home/oxarbitrage/.cache/zebra"

- debug_enable_at_height = 0 enables the mempool independently of the tip height.
- The [mining] section is necessary for mining blocks, and so is the RPC endpoint, rpc.listen_addr.
- initial_testnet_peers is needed because Zebra restarts behind the fork block, approximately 100 blocks behind, so it needs to receive those blocks again. This is necessary until the new fork is more than 100 blocks past the fork height. At that point, this network can be isolated, and initial_testnet_peers can be set to [].
- Ensure your state.cache_dir is the same as when you saved the state in the first step.
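Using the tutorial's heights makes the 100-block rule concrete. This is a sketch. RESYNC_DEPTH is a hypothetical constant taken from the approximate figure given for initial_testnet_peers. The gap most likely comes from Zebra keeping the most recent blocks in memory rather than on disk, so the exact number may differ.

```rust
/// Approximate number of blocks Zebra falls behind the stop height after a
/// restart (hypothetical constant based on the guide's rough figure).
const RESYNC_DEPTH: u32 = 100;

/// Lowest height Zebra is expected to restart from after stopping.
fn expected_restart_height(stop_height: u32) -> u32 {
    stop_height.saturating_sub(RESYNC_DEPTH)
}

/// True once the forked chain is more than RESYNC_DEPTH blocks past the
/// fork height, so initial_testnet_peers could be emptied.
fn can_isolate(fork_height: u32, tip: u32) -> bool {
    tip.saturating_sub(fork_height) > RESYNC_DEPTH
}
```

With a fork height of 2_599_958, can_isolate first returns true at tip 2_600_059.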

Start the chain with:

zebrad -c myconf.toml start

Start s-nomp:

npm start

Start the miner:

nheqminer -l 127.0.0.1:1234 -u tmRGc4CD1UyUdbSJmTUzcB6oDqk4qUaHnnh.worker1 -t 1

Confirm Forked Chain

After Zebra retrieves blocks up to your activation height from the network, the network upgrade changes, and no more valid blocks can be received from outside.

After a while, in s-nomp, you should see submitted blocks from time to time after the fork height.

...
2023-11-24 16:32:05 [Pool] [zcash_testnet] (Thread 1) Block notification via RPC after block submission
2023-11-24 16:32:24 [Pool] [zcash_testnet] (Thread 1) Submitted Block using submitblock successfully to daemon instance(s)
2023-11-24 16:32:24 [Pool] [zcash_testnet] (Thread 1) Block found: 0049f2daaaf9e90cd8b17041de0a47350e6811c2d0c9b0aed9420e91351abe43 by tmRGc4CD1UyUdbSJmTUzcB6oDqk4qUaHnnh.worker1
2023-11-24 16:32:24 [Pool] [zcash_testnet] (Thread 1) Block notification
...

You’ll also see this in Zebra:

...
2023-11-24T19:32:05.574715Z INFO zebra_rpc::methods::get_block_template_rpcs: submit block accepted block_hash=block::Hash("0084e1df2369a1fd5f75ab2b8b24472c49812669c812c7d528b0f8f88a798578") block_height="2599968"
2023-11-24T19:32:24.661758Z INFO zebra_rpc::methods::get_block_template_rpcs: submit block accepted block_hash=block::Hash("0049f2daaaf9e90cd8b17041de0a47350e6811c2d0c9b0aed9420e91351abe43") block_height="2599969"
...

You can ignore Zebra messages about how far you are from the tip, network or system clock issues, and similar warnings.

Check that you are in the right branch with the curl command:

curl --silent --data-binary '{"jsonrpc": "1.0", "id":"curltest", "method": "getblockchaininfo", "params": [] }' -H 'Content-type: application/json' http://127.0.0.1:18232/ | jq

In the result, verify the tip of the chain is after your activation height for Nu6 and that you are in branch 00000006 as expected.
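You can also script the same check without curl. This standard-library-only sketch sends the JSON-RPC body from the curl command over a plain HTTP/1.1 connection and extracts the consensus.chaintip field. It assumes Zebra's getblockchaininfo response follows the zcashd layout, with a consensus object holding chaintip and nextblock. It sends no Authorization header, so it also assumes cookie authentication is disabled. The string search is for illustration only; a real client should use a JSON parser. All function names are hypothetical.

```rust
use std::io::{Read, Write};
use std::net::TcpStream;

/// JSON-RPC 1.0 body equivalent to the curl example's payload.
fn rpc_body(method: &str) -> String {
    format!(r#"{{"jsonrpc":"1.0","id":"curltest","method":"{method}","params":[]}}"#)
}

/// Builds a minimal HTTP/1.1 POST request for the RPC endpoint.
fn http_request(host: &str, body: &str) -> String {
    format!(
        "POST / HTTP/1.1\r\nHost: {host}\r\nContent-Type: application/json\r\n\
         Content-Length: {}\r\nConnection: close\r\n\r\n{body}",
        body.len()
    )
}

/// Sends getblockchaininfo and returns the raw HTTP response.
fn get_blockchain_info(addr: &str) -> std::io::Result<String> {
    let mut stream = TcpStream::connect(addr)?;
    stream.write_all(http_request(addr, &rpc_body("getblockchaininfo")).as_bytes())?;
    // "Connection: close" lets us read until the server closes the socket.
    let mut response = String::new();
    stream.read_to_string(&mut response)?;
    Ok(response)
}

/// Extracts the "chaintip" value with a naive string search.
fn chaintip(response: &str) -> Option<&str> {
    const KEY: &str = "\"chaintip\"";
    let start = response.find(KEY)? + KEY.len();
    let value = response[start..]
        .trim_start()
        .strip_prefix(':')?
        .trim_start()
        .strip_prefix('"')?;
    value.split('"').next()
}
```

Calling get_blockchain_info("127.0.0.1:18232") and passing the response to chaintip should yield 00000006 once the tip is past the activation height.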

Final words

Next steps depend on your use case. You might want to submit transactions with new fields, accept those transactions as part of new blocks in the forked chain, or observe changes at activation without sending transactions. Further actions are not covered in this tutorial.

Custom Testnets

Custom Testnets in Zebra enable testing consensus rule changes on a public, configured Testnet independent of the default public Zcash Testnet.

Zebra’s Testnet can be configured with custom:

- Network upgrade activation heights,

- Network names,

- Network magics,

- Slow start intervals,

- Genesis hashes, and

- Target difficulty limits.

It’s also possible to disable Proof-of-Work validation by setting disable_pow to true, so that blocks can be mined onto the chain without valid Equihash solutions or block hashes below their target difficulties.
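One part of the Proof-of-Work validation that disable_pow turns off is checking that a block hash, read as a 256-bit number, does not exceed its target. A block's target, in turn, may be no easier than the configured target_difficulty_limit. This sketch, with hypothetical names, shows that comparison on 64-digit hex strings in the big-endian form used by the config and by RPC output. It does not decode compact nBits targets or check Equihash solutions.

```rust
/// Parses a 64-hex-digit string into a 256-bit big-endian byte array.
fn parse_u256_hex(hex: &str) -> Option<[u8; 32]> {
    if hex.len() != 64 || !hex.bytes().all(|b| b.is_ascii_hexdigit()) {
        return None;
    }
    let mut out = [0u8; 32];
    for (i, byte) in out.iter_mut().enumerate() {
        *byte = u8::from_str_radix(&hex[2 * i..2 * i + 2], 16).ok()?;
    }
    Some(out)
}

/// True if `hash`, read as a 256-bit number, is at most `target`.
fn hash_meets_target(hash: &[u8; 32], target: &[u8; 32]) -> bool {
    // Big-endian byte arrays compare lexicographically like the integers.
    hash <= target
}
```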

Configuring any of those Testnet parameters, except the network name, with non-default values will result in an incompatible custom Testnet. Incompatible Testnets will fail to complete peer handshakes with one another, or could provide one another with invalid blocks or invalid mempool transactions. Peer connections that consistently provide invalid blocks or mempool transactions should be considered misbehaving and dropped.

All of these parameters are optional. If they are all omitted or set to their default values, Zebra will run on the default public Testnet.
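Network magics are the 4 bytes at the start of every peer message, so nodes with different magics reject each other's messages. The public networks use 24 e9 27 64 (Mainnet) and fa 1a f9 bf (Testnet). The configuration comment in this section suggests that the default Testnet magic is allowed but discouraged with incompatible parameters. The hypothetical check below encodes that advice. It also flags the Mainnet magic, which it assumes should never be reused on a Testnet.

```rust
/// Message-start bytes ("magic") of the public Zcash networks.
const MAINNET_MAGIC: [u8; 4] = [0x24, 0xe9, 0x27, 0x64];
const TESTNET_MAGIC: [u8; 4] = [0xfa, 0x1a, 0xf9, 0xbf];

/// Hypothetical pre-flight check for a custom Testnet's network_magic.
fn magic_warning(magic: [u8; 4], parameters_are_default: bool) -> Option<&'static str> {
    if magic == MAINNET_MAGIC {
        // Assumed to be off-limits for any Testnet.
        Some("the Mainnet magic should not be used on a Testnet")
    } else if magic == TESTNET_MAGIC && !parameters_are_default {
        // Allowed, but peers on the public Testnet would be incompatible.
        Some("the default Testnet magic is not recommended with non-default parameters")
    } else {
        None
    }
}
```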

Usage

In order to use a custom Testnet, Zebra must be configured to run on Testnet with non-default Testnet parameters. If the node is meant to mine blocks, it will need a [mining] section, and if it’s meant to mine blocks with non-coinbase transactions, it will also need the [rpc] section so the send_raw_transaction RPC method is available.

Relevant parts of the configuration file with practical Testnet parameters:

[mining]
miner_address = 't27eWDgjFYJGVXmzrXeVjnb5J3uXDM9xH9v'

[network]
network = "Testnet"
# No peers
initial_testnet_peers = []

[network.testnet_parameters]
network_name = "ConfiguredTestnet_1"
# The Testnet network magic is not reserved, but it's not recommended
# for use with incompatible Testnet parameters like those in this config.
network_magic = [0, 1, 0, 255]
slow_start_interval = 0
target_difficulty_limit = "0f0f0f0f0f0f0f0f0f0f0f0f0f0f0f0f0f0f0f0f0f0f0f0f0f0f0f0f0f0f0f0f"
disable_pow = true

# Configured activation heights must be greater than 0, and less than